Author: FounderPark

The MCP protocol, released by Anthropic last year, has suddenly become the hottest protocol in AI this year, riding the popularity of Manus and of AI agents. OpenAI, Microsoft, Google, and other major companies now support it, and in China, Alibaba Cloud Bailian and Tencent Cloud quickly followed suit by launching platforms for rapidly building MCP servers.

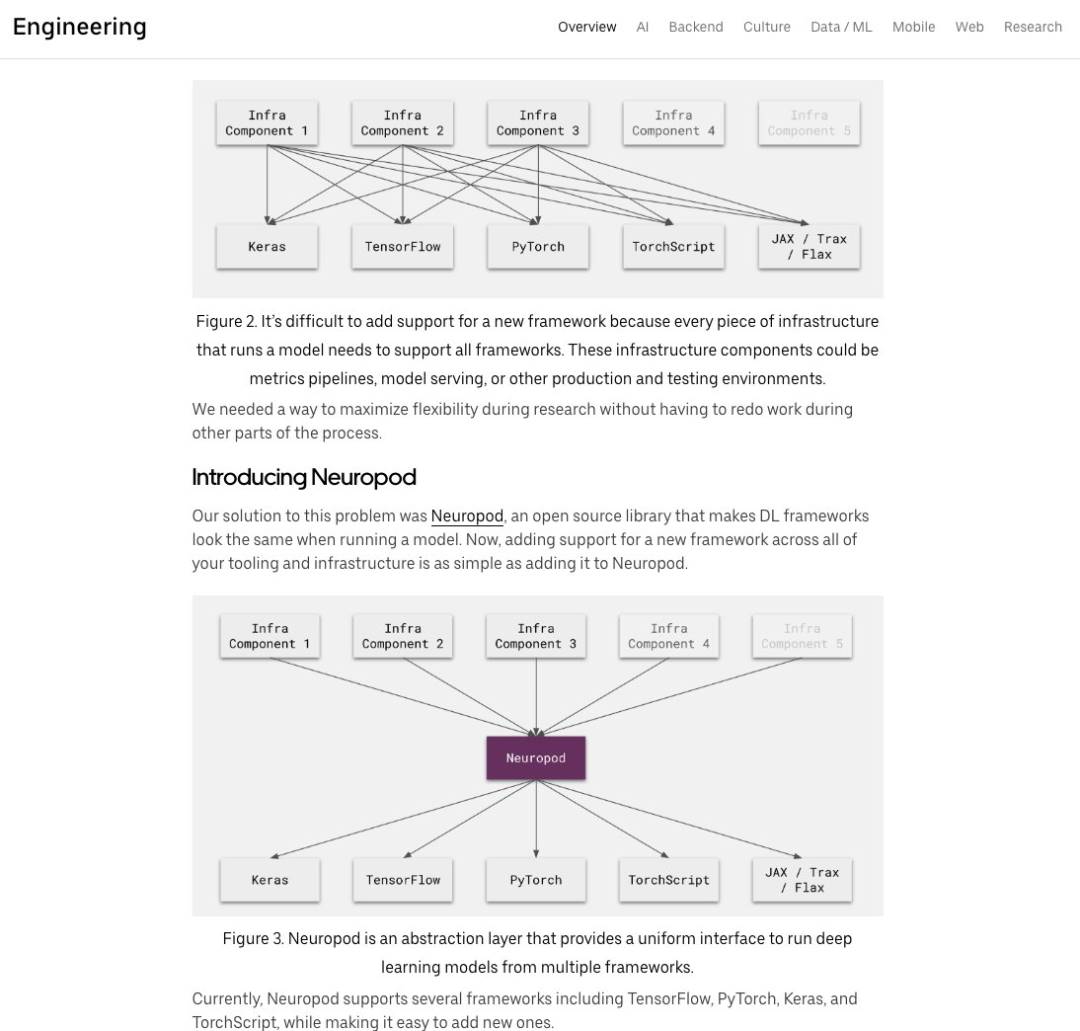

But the protocol is also controversial. Many people question whether MCP is really much different from an ordinary API, suggest that Anthropic's engineers are not well versed in Internet protocols, or worry that the protocol's simplicity creates security problems.

It is only fitting that the inventors of MCP answer these questions.

In a recent episode of the Latent Space podcast, the hosts invited Justin Spahr-Summers and David Soria Parra of Anthropic, the inventors of MCP, to talk in detail about its origins and their thinking behind it: why MCP was launched, how it differs from existing APIs, how to make better use of tools, and more. The conversation is packed with information and well worth saving to read.

Participants:

Alessio Fanelli (host): Partner and CTO of Decibel

swyx (host): Founder of Smol AI

David Soria Parra: Anthropic Engineer

Justin Spahr-Summers: Anthropic Engineer

TLDR:

The "flash of inspiration" for MCP came from an internal Anthropic project related to LSP (Language Server Protocol). Inspired by LSP, the two engineers wondered whether they could build something similar to standardize "communication between AI applications and extensions."

A core design principle of MCP is that a tool is more than the tool itself: it is tied to the client application, and therefore to the user, who should remain in full control of everything done through MCP. Saying that tools are model-controlled means they are invoked only by the model, rather than explicitly chosen by the user (except for prompting purposes).

OpenAPI and MCP are not mutually exclusive but highly complementary. The key is to choose the best tool for the task at hand: if the goal is rich interactions for AI applications, MCP is the better fit; if you want the model to easily read and interpret an API specification, OpenAPI is the better choice.

AI-assisted coding is a great way to build an MCP server quickly. Early in development, pasting MCP SDK code snippets into an LLM's context window and letting the LLM help build the server often yields very good results, and details can be refined later. This is an effective way to get basic functionality working fast and then iterate. Anthropic's MCP team has also focused heavily on keeping server building simple enough for LLMs to take part.

AI applications, ecosystems, and agents are trending toward statefulness, which is also one of the most debated topics within Anthropic's MCP core team. After many discussions and iterations, the team concluded that although they are optimistic about a stateful future, MCP cannot abandon existing paradigms and must balance the idea of statefulness against its practical operational complexity.

01 How did MCP come about?

swyx (host): First of all, what is MCP?

Justin: The Model Context Protocol, or MCP for short, is basically a design we created to help AI applications extend themselves or plug into an ecosystem of plugins. Specifically, MCP provides a communication protocol that lets AI applications (which we call "clients") and various external extensions (which we call "MCP servers") work with each other. "Extensions" here can be plugins, tools, or other resources.

The purpose of MCP is to make it easy to bring in external services and functionality, or to retrieve more data, when building AI applications, giving those applications richer capabilities. The "client-server" terminology in our naming mainly emphasizes the interaction pattern; the essence is a universal interface that "makes AI applications easier to extend."

It is important to emphasize, though, that MCP is about AI applications, not the models themselves; assuming otherwise is a common misconception. We also like the comparison of MCP to a USB-C port for AI applications: a universal interface that connects the whole ecosystem.

swyx (host): Client and server also imply that it is bidirectional, much like USB-C, which is interesting. Many people are researching this and building open-source projects around it. I feel Anthropic has been more active than other labs in courting developers. I'm curious whether this was driven by external factors, or whether the two of you just came up with the idea in a room?

David: Honestly, it was mostly just the two of us coming up with it in a room; it was not part of some grand strategy. I joined Anthropic in July 2024 and was mainly responsible for internal developer tools. During that time, I thought about how to get more employees to work deeply with our existing models. After all, these models are great and their prospects keep improving, so naturally I wanted everyone to use our own models more.

At work, given my background in developer tools, I quickly got a little frustrated: Claude Desktop was limited and could not be extended, while the IDE lacked Claude Desktop's practical features, so I had to copy content back and forth between the two, which was tedious. Over time, I realized this was an M × N problem, that is, a problem of many applications and many integrations, and that it was best solved with a protocol. At the time, I was also working on an internal project related to LSP (Language Server Protocol) that was not making progress. After a few weeks of mulling these ideas over, I had the idea of building some kind of protocol: could I make something like LSP to standardize "communication between AI applications and extensions"?

So I found Justin and shared the idea, and luckily he was interested, so we started building it together.

It took about a month and a half to go from the idea to building the protocol and completing the first integration. Justin did much of the work on the first integration, in Claude Desktop, while I built many proofs of concept in the IDE to show how the protocol could be used there. If you look through the relevant code repositories from before the official release, you can find many of these details. That is roughly the origin story of MCP.

Alessio (host): What was the timeline? I know the official release date was November 25th. When did you start working on the project?

Justin: Around July. After David proposed the idea, I quickly got excited and started building MCP with him. Progress was slow for the first few months because there was a lot of foundational work: the communication protocol itself, plus the client, server, and SDKs. But once things could talk to each other through the protocol, it became exciting, and you could build all kinds of wonderful applications.

Later, we held an internal hackathon where some colleagues used MCP to build a server that could control a 3D printer, as well as extensions such as "memory." These prototypes were very popular, which convinced us the idea had great potential.

swyx (host): Back to building MCP: we only see the final product, which is clearly inspired by LSP, as you both acknowledge. How much work went into building it? Was it mostly writing code, or mostly design work? I suspect design accounted for a lot, such as the choice of JSON-RPC. How much did you borrow from LSP, and which parts were harder?

Justin: We took a lot of inspiration from LSP. David has a lot of experience with LSP from his developer tools work, whereas I work mainly on product and infrastructure, so LSP was new to me.

In terms of design principles, LSP solves the M × N problem David mentioned. Previously, IDEs, editors, and programming languages were siloed: you could not use JetBrains' excellent Java support in Vim, nor Vim's excellent C support in JetBrains. LSP let all parties "talk" by creating a common language, so each editor and each language only has to implement the protocol once. Our goal was similar, except the scenario shifted to connecting AI applications with extensions.
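The arithmetic behind the M × N argument is easy to make concrete. The sketch below is illustrative only, and the ecosystem sizes are made-up numbers rather than figures from the interview: without a shared protocol, every application needs a custom adapter for every extension; with one, each side implements the protocol once.

```python
def pairwise_adapters(num_apps: int, num_extensions: int) -> int:
    """Without a shared protocol, every app needs its own adapter for every extension."""
    return num_apps * num_extensions


def protocol_implementations(num_apps: int, num_extensions: int) -> int:
    """With a shared protocol, each app implements the client side once and
    each extension implements the server side once."""
    return num_apps + num_extensions


if __name__ == "__main__":
    # Hypothetical ecosystem sizes, chosen only to show how the gap grows.
    for apps, exts in [(3, 4), (10, 20)]:
        print(f"{apps} apps x {exts} extensions: "
              f"{pairwise_adapters(apps, exts)} adapters vs "
              f"{protocol_implementations(apps, exts)} protocol implementations")
```

With 10 applications and 20 extensions, that is 200 bespoke adapters versus 30 protocol implementations, and the gap widens as either side grows.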

In terms of details, we adopted JSON-RPC and the idea of bidirectional communication, then went in our own direction. LSP's principle of focusing on features and offering distinct primitives, rather than on semantics, also carried over to MCP. After that, we spent a lot of time thinking about each primitive in MCP and why they should differ, which was a lot of design work. At the start, we wanted SDKs in three languages, TypeScript, Python, and Rust (for the Zed integration), each with both clients and servers; we also wanted to build an internal experimental ecosystem and stabilize the local MCP model (which involves launching subprocesses and so on).
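Since MCP builds on JSON-RPC 2.0, a short sketch of its three message kinds may help: requests (which carry an `id` and expect a reply), responses (which echo that `id`), and notifications (no `id`, no reply). Because the channel is bidirectional, either peer can originate requests. The helper names are hypothetical; the method strings `tools/list` and `notifications/initialized` are taken from the MCP specification, but check them against its current version.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_next_id = count(1)  # request ids only need to be unique per sender


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """A request carries an id, so the peer must send back a matching response."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """A notification has no id, so no response is expected."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_result(request_id: int, result: Any) -> Dict[str, Any]:
    """A successful response echoes the id of the request it answers."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def classify(msg: Dict[str, Any]) -> str:
    """Tell the three JSON-RPC 2.0 message kinds apart by their fields."""
    if "method" in msg:
        return "request" if "id" in msg else "notification"
    if "result" in msg or "error" in msg:
        return "response"
    raise ValueError("not a JSON-RPC 2.0 message")


if __name__ == "__main__":
    req = make_request("tools/list")
    print(json.dumps(req))  # what actually goes over the wire
    print(classify(make_result(req["id"], {"tools": []})))
```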

We took many criticisms of LSP into account and tried to improve on them in MCP. For example, some of LSP's handling of JSON-RPC was overly complicated, so we made more direct implementations. When building MCP, we chose to innovate in a few specific areas and borrow proven patterns everywhere else. The choice of JSON-RPC, for instance, is not that important; focusing our innovation on things like the primitives helped us a lot.

swyx (host): I'm interested in protocol design, and there is a lot to discuss here. You mentioned the M × N problem, which is the "universal box" problem that everyone who works on developer tools has run into.

The fundamental problem in infrastructure engineering, and its solution, is connecting M things to N different things through a "one-size-fits-all" box. That is the problem Uber, GraphQL, Temporal (where I worked), and React all tackle. I'm curious: did you solve M × N problems like this at Facebook?

David: To some extent, yes. That's a good example. I dealt with a lot of these problems in areas like version control systems. It comes down to building a "one-size-fits-all" box: collapsing all the problems into one thing that everyone can read from and write to. You see these problems everywhere in developer tooling.

swyx (host): What's interesting is that people who build "universal boxes" all face the same problems, namely composability, remote versus local, and so on. Justin mentioned the question of how features are presented: some things are essentially the same but need to be presented as clearly distinct concepts.

02 Core concepts of MCP: tools, resources, and prompts are all indispensable

swyx (host): I had this question when reading the MCP documentation: why distinguish between these things at all? Many people treat tool calls as a universal solution, but in fact different kinds of tool calls mean different things. Sometimes they fetch resources, sometimes they perform actions, and sometimes they serve other purposes. How did you decide which concepts belong in the same category, and why do you emphasize these distinctions?

Justin: We think about each primitive from the perspective of application developers. When you build an application, whether it is an IDE, Claude Desktop, or an agent interface, it becomes much clearer what functionality users want from an integration. Tool calls are necessary, but different kinds of functionality also need to be distinguished.

So MCP started with the following core primitives, which were later expanded:

Tools: These are the core. Tools are added directly to the model, which decides when to call them. For application developers this is similar to "function calling," except that the call is initiated by the model.

Resources: Essentially, data or context that can be added to the model's context and is controlled by the application. For example, you might want the model to automatically search for relevant resources and bring them into context, or you might build an explicit UI in the application, such as a drop-down or paper-clip menu, that lets users attach a resource to what is sent to the LLM. Both are use cases for resources.

Prompts: Text or messages that the user deliberately initiates or substitutes in. In an editor, for example, a prompt is like a slash command or something auto-completed, such as a macro you want to insert directly.
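The division of control among the three primitives can be summarized as a small lookup table. This is a hypothetical sketch for illustration, not a type from any MCP SDK, and the example strings are invented.

```python
from dataclasses import dataclass
from enum import Enum


class Controller(Enum):
    MODEL = "model"              # the model decides when to invoke it
    APPLICATION = "application"  # the client app (often on the user's behalf) decides what to attach
    USER = "user"                # the user explicitly triggers it, e.g. a slash command


@dataclass(frozen=True)
class Primitive:
    kind: str
    controlled_by: Controller
    example: str  # illustrative only


# Hypothetical examples for each primitive.
PRIMITIVES = (
    Primitive("tool", Controller.MODEL, "run_sql(query) chosen by the model mid-conversation"),
    Primitive("resource", Controller.APPLICATION, "a table schema attached via a paper-clip menu"),
    Primitive("prompt", Controller.USER, "a /summarize slash command"),
)


def who_triggers(kind: str) -> Controller:
    """Look up who initiates a given primitive."""
    for primitive in PRIMITIVES:
        if primitive.kind == kind:
            return primitive.controlled_by
    raise KeyError(f"unknown primitive: {kind}")
```

Keeping the controller explicit is what lets a client render each primitive differently: tools stay invisible until the model calls them, resources show up in attachment menus, and prompts appear as commands.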

Through MCP we express our own views on how each of these should be presented, but ultimately it is up to application developers to decide. For an application developer, having these concepts expressed separately is very useful, because it lets you choose the right experience for each and differentiate your product. Application developers do not want their apps to feel identical to everyone else's; when plugging into an open integration ecosystem, they need their own distinctive approach to create the best experience.

I think there are two aspects. First, tool calls currently account for more than 95% of integrations, and I hope more clients will use resources and prompts. Prompts should come first: they are very practical, and you can build a traceable MCP server with them. Prompts are user-driven, so the user decides when to pull information in rather than waiting for the model to act. I also hope more MCP servers will use prompts to demonstrate how their tools are meant to be used.

Second, resources also have great potential. Imagine an MCP server that exposes documents, databases, and similar resources, and a client that builds a complete index around them. Because resources are not model-driven, they can be rich in content: you may have far more resource content than would ever fit in the context window. I look forward to applications making better use of these primitives over the coming months to create richer experiences.

Alessio (host): When you have a hammer, everything looks like a nail, and people use tools to solve every problem. For example, many people query databases with tools rather than resources. I'm curious about the pros and cons of tools versus resources when there is an API (such as a database). When should you use a tool to run SQL queries, and when should you use resources to handle the data?

Justin: The way we distinguish tools from resources is that tools are invoked by the model: the model decides on its own whether to find an appropriate tool and use it. If you want the LLM to be able to run SQL queries, it makes sense to expose that as a tool.

Resources are more flexible, but the picture is complicated by the fact that many clients do not support them yet. Ideally, resources would be used to expose things like database table schemas. Users can then use them to give the application relevant context at the start of a conversation, or the AI application can find the resources automatically. Whenever you need to list entities and read them, it makes sense to model them as resources. Resources are uniquely identified by URIs, so they can also act as general-purpose converters; for example, an MCP server can interpret a URI the user types in. Take the Zed editor: it has a prompt library that interacts with MCP servers to fill in prompts. Both sides have to agree on the URI and the data format, which makes it a neat example of resources being used across applications.
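A toy registry shows the list-then-read pattern for URI-addressed resources. The class and the `db://` URI are hypothetical; the result fields (`uri`, `name`, `mimeType`, `contents`) are modeled loosely on the resource listing and reading results in the MCP specification and should be checked against the spec before relying on them.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Resource:
    uri: str        # unique identifier, agreed on by client and server
    name: str
    mime_type: str
    text: str


class ResourceRegistry:
    """Toy server-side store: clients list resources, then read the ones they want by URI."""

    def __init__(self) -> None:
        self._by_uri: Dict[str, Resource] = {}

    def add(self, resource: Resource) -> None:
        if resource.uri in self._by_uri:
            raise ValueError(f"duplicate URI: {resource.uri}")
        self._by_uri[resource.uri] = resource

    def list_resources(self) -> List[dict]:
        # Listing returns metadata only; the client decides what to read and attach.
        return [{"uri": r.uri, "name": r.name, "mimeType": r.mime_type}
                for r in self._by_uri.values()]

    def read_resource(self, uri: str) -> dict:
        r = self._by_uri[uri]  # KeyError for unknown URIs
        return {"contents": [{"uri": r.uri, "mimeType": r.mime_type, "text": r.text}]}
```

Separating listing from reading is what lets a client index far more resources than it will ever place in the context window.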

Back to the application developer's perspective: think about your needs and apply this idea in practice. For example, look at your application's existing features and ask which of them could be split out and implemented by an MCP server under this approach. In general, any IDE with an attachment menu maps naturally onto resources; those implementations already exist.

swyx (host): Yes. When I saw the @ symbol in Claude Desktop, I immediately thought it was the same feature as in Cursor, and now other users can take advantage of it too. That is a great design goal, because the feature already exists and people can easily understand and use it. I showed that chart and you all agreed it was valuable. I think it is very helpful and should be on the front page of the documentation. That's my suggestion.

Justin: Would you like to submit a PR for this? We really like this suggestion.

swyx (host): OK, I’ll submit it.

As a developer relations person, I always try to give people clear guidance: list the key points first, then spend two hours explaining them in detail. So a single picture that covers the core content is very helpful. I also appreciate your emphasis on prompts. In the early days of ChatGPT and Claude, many people tried to build prompt libraries and prompt managers, like the ones on GitHub, but none of them really caught on.

Indeed, this area needs more innovation. People expect prompts to be dynamic, and you make that possible. I really like the idea of multi-step prompts you mentioned: sometimes, to get the model to work properly, you need a multi-step prompting approach or have to work around some limitations. A prompt is not just a single conversational input; sometimes it is an entire sequence of conversation turns.

swyx (host): I think this is where the concepts of resources and tools start to converge a bit, because you're now saying that sometimes you want a degree of user or application control, and other times you want the model in control. So are we effectively just selecting a subset of tools?

David: Yes, I think that's a valid concern. Ultimately, this is a core design principle of MCP: a tool is more than just the tool itself; it is tied to the client application, and therefore to the user, who should have full control over everything that happens through MCP. When we say tools are model-controlled, we mean they are invoked only by the model, not explicitly chosen by the user (except for prompting purposes, of course, and even that should not be a regular UI feature).

But I think it is entirely reasonable for the client application or the user to filter and refine what an MCP server provides. For example, a client application can take the tool descriptions from an MCP server and optimize how they are displayed. Under the MCP paradigm, the client application should have full control. We also have a preliminary idea to add functionality to the protocol that lets server developers logically group primitives such as prompts, resources, and tools. These groups could be treated as separate MCP servers that users combine as they need.
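On the client side, filtering can be as simple as an allowlist applied to a server's tool list. The grouping function is purely hypothetical, since grouping primitives is described here only as a preliminary idea rather than an existing protocol feature; the tool names are invented.

```python
from typing import Dict, Iterable, List, Set


def filter_tools(tools: List[dict], enabled: Set[str]) -> List[dict]:
    """Keep only the tools the user or application has enabled, preserving server order."""
    return [tool for tool in tools if tool["name"] in enabled]


def group_tools(tools: List[dict], groups: Dict[str, Iterable[str]]) -> Dict[str, List[dict]]:
    """Hypothetical grouping: each named group is a subset of tools that a client
    could present as if it were a separate server. Unknown names are skipped."""
    by_name = {tool["name"]: tool for tool in tools}
    return {group: [by_name[name] for name in names if name in by_name]
            for group, names in groups.items()}
```

For example, a chat client could offer an "issues" group and a "db" group as toggles instead of exposing every tool at once.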

03 MCP and OpenAPI: Competition or complementarity?

swyx (host): I'd like to talk about how MCP compares with OpenAPI. After all, this is clearly one of the questions everyone cares about most.

Justin/David: Fundamentally, the OpenAPI specification is a very powerful tool that I use often when developing APIs and their clients. But for large language model (LLM) use cases, the OpenAPI specification is too granular and does not capture higher-level, AI-specific concepts, such as the MCP primitives we just discussed and the mindset of application developers. Models benefit more from tools, resources, prompts, and other primitives designed specifically for them than from being handed a REST API and left to figure it out.

On the other hand, we deliberately made the MCP protocol stateful, because AI applications and interactions are inherently inclined toward statefulness. Statelessness will always have its place to some extent, but as interaction modalities (video, audio, and so on) multiply, statefulness will become more and more common, which makes a stateful protocol especially useful.

In fact, OpenAPI and MCP are not mutually exclusive but complementary; each is powerful in its own right. I think the key is to choose the tool best suited to the specific task. If the goal is rich interactions for AI applications, MCP is the better fit; if you want the model to easily read and interpret an API specification, OpenAPI is the better choice. Some people built bridges between the two early on: there are tools that convert OpenAPI specifications into MCP form for publishing, and vice versa, which is great.
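The OpenAPI-to-MCP bridges mentioned here are separate projects, and the function below is not any of them. It is a minimal sketch, assuming an OpenAPI 3 operation object, that maps one operation's path and query parameters onto an MCP-style tool definition (`name`, `description`, and `inputSchema` as a JSON Schema object). Request bodies, `$ref` resolution, and authentication are deliberately left out.

```python
from typing import Any, Dict, List


def openapi_operation_to_tool(path: str, method: str, op: Dict[str, Any]) -> Dict[str, Any]:
    """Convert one OpenAPI operation into an MCP-style tool definition (sketch)."""
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for param in op.get("parameters", []):
        location = param.get("in")
        if location not in ("path", "query"):
            continue  # headers and cookies are not model-facing arguments here
        properties[param["name"]] = param.get("schema", {"type": "string"})
        # OpenAPI path parameters are always required.
        if location == "path" or param.get("required", False):
            required.append(param["name"])
    # Fall back to a name derived from the method and path when operationId is missing.
    name = op.get("operationId") or f"{method.lower()}_{path.strip('/').replace('/', '_')}"
    return {
        "name": name,
        "description": op.get("summary") or op.get("description") or f"{method.upper()} {path}",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }
```

A mechanical conversion like this yields a working tool, but as the interview notes, API-shaped definitions are often more granular than what a model benefits from, so the generated descriptions usually deserve a manual pass.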

Alessio (host): I co-hosted a hackathon at AGI Studios, where I saw a personal agent developer build an agent that can generate an MCP server: you just enter the URL of an API specification and it generates the corresponding MCP server. What do you make of this? Does it mean most MCP servers are just a thin layer on top of existing APIs, without much unique design? Will it stay that way, with AI mostly wiring up existing APIs, or will there be new, unprecedented MCP experiences?

Justin/David: I think both will exist. On the one hand, bringing data into an application through a connector will always be valuable. Tool calls are the default today, but other primitives may turn out to be better suited to such problems. Even if a server remains a connector or adapter layer, it can add value by mapping onto different primitives.

On the other hand, there really are opportunities for interesting use cases: MCP servers that are more than adapters. For example, a memory MCP server could let an LLM remember information across conversations, and a sequential thinking MCP server could improve the model's reasoning. Rather than integrating with external systems, these servers give the model entirely new ways of thinking.
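To make the memory idea concrete, here is a toy backing store that a memory server's tools could sit on top of. It is hypothetical and far simpler than any real memory server: facts persist across conversations only because they live in the server process rather than in any single context window, and recall is a plain case-insensitive substring match.

```python
from collections import defaultdict
from typing import Dict, List


class MemoryStore:
    """Hypothetical backing store for "remember" and "recall" tools."""

    def __init__(self) -> None:
        self._facts: Dict[str, List[str]] = defaultdict(list)

    def remember(self, user_id: str, fact: str) -> str:
        """Store a fact and return a short confirmation the model can read."""
        self._facts[user_id].append(fact)
        return f"Stored {len(self._facts[user_id])} fact(s) for {user_id}."

    def recall(self, user_id: str, keyword: str = "") -> List[str]:
        """Return stored facts containing the keyword (all facts if it is empty)."""
        needle = keyword.lower()
        return [fact for fact in self._facts.get(user_id, []) if needle in fact.lower()]
```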

Either way, it is entirely possible to build servers with AI. Even if the functionality you need is original rather than an adaptation of another API, the model can usually find a way to implement it. Admittedly, many MCP servers will be API wrappers, which is both reasonable and effective and can get you a long way. But we are still in an exploratory phase, continually discovering what is possible.

As clients improve their support for these primitives, rich experiences will emerge. For example, nobody has yet built an MCP server that can "summarize the contents of a subreddit," but the protocol is fully capable of it. I think that when people's needs shift from "I just want to connect the things I care about to an LLM" to "I want a real workflow, a genuinely richer experience, where the model interacts deeply," you will see these innovative applications emerge. For now, though, there is a chicken-and-egg problem between what clients support and what server developers want to build.

04 How to quickly build an MCP server: using AI-assisted coding

Alessio (host): I think another, less discussed aspect of MCP is building servers. What advice do you have for developers who want to start building MCP servers? As a server developer, how do you strike the right balance between providing detailed descriptions (so the model understands) and returning raw data directly (for the model to process on its own)?

Justin/David: I have a few suggestions. One of the great things about MCP is how easy it is to build simple functionality: you can have something built in about half an hour. It may not be perfect, but it is good enough for basic needs. The best way to get started is to pick your favorite programming language and use its SDK if one exists; build a tool you want your model to interact with; build an MCP server and add the tool to it; write a simple description of the tool; connect the server to your favorite application over standard input/output (stdio); and then watch how the model uses it.
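The steps above can be sketched without any SDK to show what a tiny stdio server does underneath: read one JSON-RPC message per line from stdin, answer `tools/list` and `tools/call`, and write replies to stdout. This is a hand-rolled illustration, not the official SDK's API; it omits the `initialize` handshake and capability negotiation that real clients perform, so in practice you would use an SDK. The `add_numbers` tool is hypothetical, and the result shapes follow the MCP specification as far as we can tell.

```python
import json
import sys
from typing import Any, Dict, Optional


def add_numbers(a: float, b: float) -> str:
    """Hypothetical example tool: returns the sum as text for the model."""
    return str(a + b)


TOOLS: Dict[str, Dict[str, Any]] = {
    "add_numbers": {
        "fn": add_numbers,
        "description": "Add two numbers and return the sum as text.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
    }
}


def _error(msg_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(msg: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Dispatch one JSON-RPC message; return a response, or None for notifications."""
    if "id" not in msg:
        return None  # notifications never get a reply
    method, params = msg.get("method"), msg.get("params") or {}
    if method == "tools/list":
        result = {"tools": [{"name": name, "description": t["description"],
                             "inputSchema": t["inputSchema"]} for name, t in TOOLS.items()]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(msg["id"], -32602, f"unknown tool: {params.get('name')}")
        try:
            text = tool["fn"](**(params.get("arguments") or {}))
        except TypeError as exc:  # wrong or missing arguments
            return _error(msg["id"], -32602, str(exc))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return _error(msg["id"], -32601, f"method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg["id"], "result": result}


def serve_stdio() -> None:
    """Newline-delimited JSON over stdio; call this from your entry point."""
    for line in sys.stdin:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            sys.stdout.write(json.dumps(reply) + "\n")
            sys.stdout.flush()
```

Keeping `handle` separate from the I/O loop makes the dispatch logic easy to test without a client attached.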

For developers, quickly seeing the model work on something they care about is very compelling, and it prompts them to think harder about which other tools, resources, and prompts they need, and how to evaluate performance and tune prompts. You can go deep on all of that, but starting simple and watching the model interact with what you care about is fun in itself. MCP makes development fun and gets models working quickly.

I also lean heavily on AI-assisted coding. Early in development, we found we could drop code snippets from the MCP SDK into an LLM's context window and let it help build the server. The results were often very good, and the details could be refined later. This is a great way to get basic functionality working quickly and iterate. From the beginning, we worked hard to keep server building simple enough for LLMs to participate. Today, an MCP server may take only 100 to 200 lines of code to get started, which is really simple. If there is no ready-made SDK, you can give the model the relevant specification or another SDK and have it help you build parts of one. Implementing tool calls in your favorite language is usually very straightforward, too.

Alessio (host): I find that server builders have a lot of control over the format and content of the data that is ultimately returned. For tool calls such as Google Maps, for example, the builder decides which attributes are returned. If an attribute is left out, the user cannot override or change that. This resembles a frustration I have with some SDKs: when people build SDKs that wrap an API and leave out parameters the API later adds, I can't use those new features. What do you think about this? How much say should users have, or is it entirely up to the server designer?

Justin/David: On the Google Maps example, some of the responsibility may be ours, since it is a reference server we released. In general, at least for now, we intentionally design tool call results not as structured JSON data matching a specific schema, but as messages, such as text and images, that can be fed directly into the LLM. That is, we lean toward returning plenty of data and trusting the LLM to filter and extract what it cares about. We have put a lot of effort into this, aiming to give the model the flexibility to get the information it needs, because that is its strength. We think about how to fully exploit the LLM's potential without being overly restrictive or prescriptive, so that things do not become hard to extend as models improve. So in the example servers, the ideal is for results to be passed through intact from the API being called, letting the API's data flow straight through.
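A sketch of the pass-through approach: instead of projecting an upstream API response onto a fixed schema, wrap the whole payload as text content and let the model pick out what it needs. The `content`, `type`, `text`, and `isError` fields follow the tool-result shape described in the MCP specification (verify against its current version); the function name and the payload in the test are invented.

```python
import json
from typing import Any, Dict


def to_tool_result(api_payload: Any, is_error: bool = False) -> Dict[str, Any]:
    """Wrap an upstream API response as model-readable text content.

    Every field is passed through unchanged rather than filtered to a subset,
    so attributes the server author did not anticipate still reach the model.
    """
    if isinstance(api_payload, str):
        text = api_payload
    else:
        text = json.dumps(api_payload, ensure_ascii=False, indent=2)
    return {"content": [{"type": "text", "text": text}], "isError": is_error}
```

The trade-off is more tokens per call in exchange for not having to predict, at server-design time, which attributes users will need.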

Alessio (host): It is indeed a difficult decision to make where to draw the line.

David: I'd like to stress the role of AI here a bit. Unsurprisingly, many of the example servers were written by Claude. People today tend to approach problems with traditional software engineering habits, but we actually need to relearn how to build systems for LLMs and trust their capabilities. With LLMs improving significantly every year, it is now wise to hand data processing to the models that are good at it. That may mean setting aside some of the traditional software engineering practices of the past twenty, thirty, or even forty years.

Looking at MCP from another angle, the pace of AI development is astonishing, which is both exciting and a little worrying. For the next wave of model capability gains, the biggest bottleneck may be the ability to interact with the outside world, such as reading external data sources and taking stateful actions. At Anthropic, we place great importance on safe interaction and take corresponding control and calibration measures. As AI advances, people will expect models to have these capabilities, and connecting models to the outside world is key to making AI more productive. MCP is our bet on that future direction and its importance.

Alessio (host): Right. I think any API attribute with "formatted" in its name should go; we should get raw data from every interface. Why pre-format it? The model is certainly smart enough to format things like addresses on its own, so that part should be left to the end user.

05 How can MCP make better use of more tools?

swyx (host): I'd like to ask another question: how many related functions can a single MCP setup support? This is a question of breadth versus depth, and it relates directly to the MCP nesting we just discussed.

When Claude launched its first million-token context example in April 2024, it was said to support 250 tools, but in many practical cases the model cannot really use that many tools effectively. In a sense this is a breadth problem: there are no tools that call other tools, only the model and a flat list of tools, which makes it easy for the model to confuse them. When tools have similar functions, the model may call the wrong one and produce suboptimal results. Do you have a recommendation for the maximum number of MCP servers enabled at any one time?

Justin: Frankly, there is no absolute answer. It depends partly on the model you use, and partly on whether the tools' names and descriptions are clear enough for the model to understand them accurately without confusion. Ideally, you would give the LLM all the information and let it handle everything; that is the future MCP envisions. In real applications, though, the client application (that is, the AI application) may need to do some extra work, such as filtering the tool set, or using a small, fast LLM to pick out the most relevant tools before passing them to the larger model. Filtering can also be done by setting up some MCP servers as proxies for other MCP servers.
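The pre-filtering step described here could look like the sketch below. A crude word-overlap score stands in for the small, fast LLM; that substitution is an assumption made for illustration, and a real client would more likely use a model or embeddings. The tool names are hypothetical.

```python
import re
from typing import Dict, List, Set


def _words(text: str) -> Set[str]:
    """Lower-case word set; underscores split names like run_sql into run, sql."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def preselect_tools(query: str, tools: List[Dict[str, str]], k: int = 3) -> List[Dict[str, str]]:
    """Keep at most k tools whose name or description shares words with the query."""
    query_words = _words(query)

    def score(tool: Dict[str, str]) -> int:
        return len(query_words & _words(tool["name"] + " " + tool["description"]))

    ranked = sorted(tools, key=score, reverse=True)  # stable: ties keep input order
    return [tool for tool in ranked[:k] if score(tool) > 0]
```

Only the surviving tools would then be sent to the large model, which keeps its tool list short and reduces the chance of it picking a near-duplicate.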

At least for Claude, it's safe to say that hundreds of tools are supported. It's unclear how other models fare. This should improve over time, so be careful not to impose limits that hold back that growth. The number of tools that can be supported depends heavily on how much their descriptions overlap. If the servers have distinct capabilities and the tool names and descriptions are clear and unique, more tools can be supported than if some servers have similar capabilities (for example, connecting to both a GitLab server and a GitHub server).
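One way to spot the description overlap that confuses models is to compare tool descriptions pairwise. The sketch below uses Jaccard similarity over word sets with an arbitrary 0.5 threshold; both the metric and the threshold are assumptions, not guidance from the interview, and the GitHub and GitLab tool definitions in the test are made up.

```python
import re
from itertools import combinations
from typing import Dict, List, Set, Tuple


def _tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Size of the shared word set divided by the size of the combined word set."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def confusable_pairs(tools: List[Dict[str, str]], threshold: float = 0.5) -> List[Tuple[str, str, float]]:
    """Return (name, name, similarity) for tool pairs whose descriptions overlap heavily."""
    pairs = []
    for first, second in combinations(tools, 2):
        similarity = jaccard(first["description"], second["description"])
        if similarity >= threshold:
            pairs.append((first["name"], second["name"], round(similarity, 2)))
    return pairs
```

Flagged pairs are candidates for sharper, more distinctive descriptions, or for enabling only one of the two servers at a time.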

It also depends on the type of AI application you are building. For highly autonomous, agentic applications, you may want to ask the user fewer questions and expose less configuration; but for something like an IDE or a chat app, it makes perfect sense to let users choose which feature sets they want at different times rather than enabling everything all the time.

swyx (host): Finally, let's talk about the Sequential Thinking MCP Server. Its branching capability and the way it gives the model "more room to write" are very interesting. Anthropic also published a new engineering blog post last week introducing their Thinking Tool, and the community has wondered whether the Sequential Thinking Server and this Thinking Tool overlap. In fact, this is just different teams doing similar things in different ways; after all, there are many ways to implement it.

Justin/David: As far as I know, the Sequential Thinking Server shares no direct lineage with Anthropic's thinking tool. But it does reflect a general phenomenon: there are many different strategies for getting an LLM to think more carefully, reduce hallucinations, or achieve other goals, and each shows its benefits along different dimensions, some more fully and reliably than others. That is the power of MCP: you can build different servers, or offer different prompts or tools within the same server, to get different functionality, and have the LLM apply specific thinking patterns to achieve different results.

So there is no single ideal, prescribed way for an LLM to think.

swyx (host): I think different applications will have different uses, and MCP allows you to achieve this diversity, right?

Justin/David: Exactly. I think some MCP servers fill gaps in what the models themselves could do at the time. Training, preparing, and researching models takes a long time, and their capabilities improve gradually. Take the sequential thinking server: it may look simple, and while it isn't trivial, it can be built in just a few days. Building that kind of complex thinking directly into the model, however, is certainly not something you can do in a few days.

For example, if the model I use is not very reliable, or someone feels that the results generated by the current model are not reliable overall, I can imagine building an MCP server that lets the model try to generate three results for a query and then picks the best one. With MCP, this recursive and composable LLM interaction can be achieved.
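A minimal sketch of the "generate three results, keep the best" idea follows. The generator and the scorer are passed in as plain callables because the conversation doesn't say how either would work; in a real MCP server, generation might go through the client's sampling feature and scoring might be another model call. `best_of_n`, `fake_generate`, and `fake_score` are hypothetical placeholders.

```python
from typing import Callable


def best_of_n(
    query: str,
    generate: Callable[[str, int], str],
    score: Callable[[str, str], float],
    n: int = 3,
) -> str:
    """Generate n candidate answers for `query` and return the highest-scoring one.

    `generate(query, i)` produces the i-th candidate; `score(query, answer)` rates it.
    Both stand in for model calls. Ties go to the earliest candidate, because
    max() returns the first maximal element it sees.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate(query, i) for i in range(n)]
    return max(candidates, key=lambda answer: score(query, answer))


if __name__ == "__main__":
    canned = ["Paris.", "The capital of France is Paris.", "I am not sure."]

    def fake_generate(query: str, i: int) -> str:
        return canned[i]  # pretend these are three model outputs

    def fake_score(query: str, answer: str) -> float:
        # Prefer answers mentioning Paris, then (slightly) longer ones.
        return float("Paris" in answer) + len(answer) / 1000

    print(best_of_n("What is the capital of France?", fake_generate, fake_score))
```

Keeping `generate` and `score` injectable is what makes this composable: either callable could itself be backed by another MCP server.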

06 What is the difference between a complex MCP setup and an agent?

Alessio (moderator): I'd like to ask about composability next. What do you think about nesting one MCP server inside another? Are there any plans for this? For example, if I want to build an MCP server that summarizes Reddit threads, it might need to call an MCP server for the Reddit API and another that provides summarization. How would I build such a "super MCP"?

Justin/David: That's a very interesting topic, and there are two ways to look at it.

On one hand, consider building a component like a summarization function. Although it may call an LLM, we'd like it to stay independent of any specific model. This is where MCP's bidirectional communication comes in (the feature is called sampling). Take Cursor as an example: it manages the interaction loop with the LLM. A server developer can ask the client (that is, the application the user is working in, here Cursor) to perform certain tasks, for instance to summarize something using the model the user currently has selected and return the result. That way the choice of summarization model is up to Cursor, and the developer doesn't need to bring an extra SDK or API key into the server, so the server stays model-independent.
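To make the sampling round trip concrete, the sketch below only builds the JSON-RPC message a server might send to ask the client to summarize some text with whichever model the user has selected; it opens no connection. The method name `sampling/createMessage` and the `messages`, `systemPrompt`, and `maxTokens` fields follow my reading of the MCP specification, so check the current spec revision before relying on exact field names. `build_summary_request` is a hypothetical helper.

```python
import json


def build_summary_request(request_id: int, text: str, max_tokens: int = 300) -> str:
    """Build a JSON-RPC 2.0 request asking the MCP client to run an LLM completion.

    The server names no model and holds no API key: the client decides which
    model answers, which is what keeps the server model-independent.
    """
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [
                {
                    "role": "user",
                    "content": {"type": "text", "text": f"Summarize the following:\n\n{text}"},
                }
            ],
            "systemPrompt": "You are a concise summarizer.",
            "maxTokens": max_tokens,
        },
    }
    return json.dumps(message)


if __name__ == "__main__":
    print(build_summary_request(1, "Long Reddit thread text goes here..."))
```

The client would typically show this request to the user for approval, run it on its own model, and send the completion back as the JSON-RPC response with the same `id`.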

On the other hand, it is entirely possible to build more complex systems with MCP. You can imagine an MCP server that is used by clients like Cursor or Windsurf and that itself acts as an MCP client, calling other MCP servers to create a richer experience. This is recursive, and the recursion also shows up in places like the authorization specification. You can chain together applications that are both servers and clients, and even arrange MCP servers into DAGs (directed acyclic graphs) to implement complex interaction flows. A smart MCP server could even draw on the capabilities of the entire MCP server ecosystem, and people have already experimented with this. If you add features such as automatic server selection and installation, many more possibilities open up.
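To illustrate the DAG idea, the sketch below models a small composition in which each node stands for an MCP server and each edge means "connects to as a client". It uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to find an order in which the servers could be started so that everything a server calls is up first; a cyclic composition raises `graphlib.CycleError`. The server names reuse the Reddit example and are hypothetical, and no MCP traffic is involved.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical composition: each key is a server, each value the set of
# servers it calls (that is, connects to as an MCP client).
COMPOSITION = {
    "reddit_digest": {"reddit_api", "summarizer"},
    "summarizer": set(),  # would use client sampling, so it calls no other server
    "reddit_api": set(),
}


def startup_order(graph: dict[str, set[str]]) -> list[str]:
    """Return servers ordered so that each one comes after everything it calls.

    TopologicalSorter treats the mapped values as predecessors, so dependencies
    are emitted first. A cycle raises graphlib.CycleError.
    """
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(startup_order(COMPOSITION))  # "reddit_digest" always comes last
    try:
        startup_order({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("cyclic composition rejected")
```

The relative order of `reddit_api` and `summarizer` is not guaranteed, since neither depends on the other; only the constraint that `reddit_digest` follows both is.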

Our SDKs still need more work to make it easier to build applications that are both clients and servers (recursive MCP servers), or to reuse the behavior of multiple MCP servers more conveniently. That is something to improve going forward, but these examples already show scenarios that are feasible today even though they are not yet widely adopted.

swyx (host): This sounds very exciting, and I'm sure many people will get ideas and inspiration from it. So can an MCP that is both a server and a client be considered an agent? In a sense, an agent is something you send a request to, and it performs underlying operations you may not be fully aware of; there's a layer of abstraction between you and the raw data source. Do you have any particular thoughts on agents?

Justin/David: I think you can indeed build an agent with MCP. The distinction to draw is between something that is merely an MCP server plus a client and a genuine agent. For example, inside an MCP server you can use the sampling loop the client provides and let the model call tools, which enriches the experience and gives you a real agent. Building one this way is fairly straightforward.

On the relationship between MCP and agents, we have a few different lines of thinking:

First, MCP may be a good way to expose an agent's capabilities, though it may currently lack some features that would improve the user interaction experience, and those should be considered for inclusion in the protocol.

Second, MCP can serve as the basic communication layer for building agents or combining different agents. There are other views too, for example that MCP should focus on integration at the AI-application level rather than on the concept of agents as such. This is still under discussion, and each direction has trade-offs. Going back to the earlier "universal box" analogy, when designing a protocol and stewarding an ecosystem, we have to be especially careful not to pile on complex functionality or let the protocol try to do everything, or it may end up doing everything poorly. The key question is how far agents fit naturally into the existing model and paradigms, and how far they should exist as a separate concept. That is still unresolved.

swyx (host): I think once you have bidirectional communication, and the client and server can be combined into one and delegate work to other MCP servers, it starts to look much more like an agent. I appreciate that you always keep simplicity in mind and don't try to solve every problem.

07 Next Steps for MCP: How to Make the Protocol More Reliable?

swyx (host): The recent update moving from stateful to stateless servers has drawn a lot of interest. Why did you choose Server-Sent Events (SSE) as the transport, and why support a pluggable transport layer? Was it influenced by Jared Palmer's tweet, or was it already in the works?

Justin/David: Not really. We discussed the trade-offs between stateful and stateless designs publicly on GitHub a few months ago and have been weighing them since. We believe AI applications, the ecosystem, and agents are heading toward statefulness. This has been one of the most contested topics within the MCP core team, discussed and iterated on many times. Our conclusion was that although we are optimistic about a stateful future, we can't break away from existing paradigms, and we have to balance the idea of statefulness against the complexity of running it in practice. If every MCP server had to maintain a long-lived persistent connection, deployment and operations would become very difficult. The basic idea of the original SSE transport was that once you deploy an MCP server, a client connects and keeps the connection open almost indefinitely. That is a very demanding requirement for anyone operating at scale, and not an ideal deployment or operations model.

So we thought about how to balance the value of statefulness against ease of operation. The Streamable HTTP transport we introduced, which still includes SSE, is designed for incremental adoption. A server can start as a plain HTTP server that returns results in response to HTTP POST requests, then gradually add capabilities such as streaming results, or even sending its own requests to the client. As long as the server and client support session resumption (reconnecting and continuing after a disconnect), you can scale easily while still supporting stateful interactions, and cope better with unstable networks and similar conditions.
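Session resumption over SSE can be sketched with event IDs: the server tags each event with an increasing `id`, keeps recent events, and when a client reconnects it sends back the last ID it saw (the SSE standard uses the `Last-Event-ID` header for this), so the server replays only what was missed. The in-memory `EventLog` class below is a hypothetical illustration of that idea, not code from any MCP SDK; a real server would keep one log per session (my understanding is that the Streamable HTTP transport identifies sessions with an `Mcp-Session-Id` header) and would bound the log's size.

```python
from dataclasses import dataclass, field
from typing import Optional


def format_sse(event_id: int, data: str) -> str:
    # An SSE frame is "field: value" lines terminated by a blank line;
    # multi-line data must be split across several "data:" lines.
    lines = [f"id: {event_id}"] + [f"data: {line}" for line in data.split("\n")]
    return "\n".join(lines) + "\n\n"


@dataclass
class EventLog:
    """Hypothetical per-session log of SSE events, replayable after a disconnect."""
    events: list[tuple[int, str]] = field(default_factory=list)
    next_id: int = 1

    def append(self, data: str) -> str:
        """Record an event and return it formatted as an SSE frame."""
        event_id = self.next_id
        self.next_id += 1
        self.events.append((event_id, data))
        return format_sse(event_id, data)

    def replay_after(self, last_event_id: Optional[int]) -> list[str]:
        """Return SSE frames for every event newer than `last_event_id`."""
        start = 0 if last_event_id is None else last_event_id
        return [format_sse(i, d) for i, d in self.events if i > start]


if __name__ == "__main__":
    log = EventLog()
    for chunk in ["partial result 1", "partial result 2", "final result"]:
        log.append(chunk)
    # A client that saw event 1 before dropping reconnects with "Last-Event-ID: 1".
    print("".join(log.replay_after(1)), end="")
```

Because the server replays from its own log, the client never sees duplicates or gaps, and the server does not need to hold one connection open indefinitely.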

Alessio (moderator): Yes, and the session ID too. What are your plans for authentication? Right now, for some MCP servers, I just paste my API key into the command line. Where do you see this going? Will there be something like an MCP-specific config file for managing credentials?

Justin/David: We have already included an authorization specification in the next draft revision of the protocol. Right now the focus is on user-to-server authorization, using OAuth 2.1 or a modern subset of it. This works well, and people are building on it. It solves a lot of problems, because you don't want users pasting API keys around, especially since most servers will be remote in the future and need to authorize securely.

Locally, things are different. Because authorization is defined at the transport layer, it relies on framing the data (setting request headers), which standard input/output (stdin/stdout) can't do directly. On the other hand, a program that talks over stdio locally is very flexible; it can even open a browser to handle the authorization flow. Whether to use HTTP for authorization locally hasn't been fully settled internally: Justin leans toward it, I personally disagree, and it's still debated.

For authorization, as with the rest of the protocol, we try to stay minimal: solve real pain points, start with the simplest functionality, and expand only as actual needs arise, to avoid over-design. Protocol design calls for great caution, because a mistake is essentially irreversible; undoing it would break backward compatibility. So we only accept or add things that have been thoroughly considered and validated, and let the community experiment through the extension mechanism until there is broad consensus that a feature really belongs in the core protocol and that we can keep supporting it in the future. That approach is simpler and more robust.

Authorization and API keys are a good example; we brainstormed a lot there. The current authorization method (a subset of OAuth 2.1) can already cover the API-key use case. An MCP server can act as an OAuth authorization server and add the related functionality, but if you visit its "/authorize" page, it may simply show a text box for entering your API key. That may not be ideal, but it fits the existing pattern and works today. We worry that adding more options would force both clients and servers to consider and implement more cases, increasing complexity.
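One concrete piece of the OAuth 2.1 subset discussed above: OAuth 2.1 requires PKCE (RFC 7636) for clients using the authorization-code flow, so an MCP client that goes through the "/authorize" step will need something like the snippet below. It generates a random `code_verifier` and derives the S256 `code_challenge` as the unpadded base64url encoding of the verifier's SHA-256 digest. This is generic OAuth rather than an MCP-specific API, and the function names are my own.

```python
import base64
import hashlib
import secrets


def make_code_verifier(num_bytes: int = 32) -> str:
    """Random PKCE code_verifier; 32 random bytes encode to 43 base64url characters.

    RFC 7636 requires 43 to 128 characters, so num_bytes should stay within 32..96.
    """
    raw = secrets.token_bytes(num_bytes)
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def s256_challenge(code_verifier: str) -> str:
    """code_challenge = BASE64URL(SHA256(ASCII(code_verifier))) with padding removed."""
    digest = hashlib.sha256(code_verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


if __name__ == "__main__":
    verifier = make_code_verifier()
    print("code_verifier: ", verifier)
    print("code_challenge:", s256_challenge(verifier))
```

The client sends `code_challenge` with `code_challenge_method=S256` on the authorization request and keeps the verifier secret until the token request, so an intercepted authorization code is useless on its own.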

Alessio (moderator): Have you thought about scopes? Yesterday we recorded an episode with Dharmesh Shah, the founder of Agent.ai. He gave an email example: he has all his email and wants finer-grained scope control, such as "you can only access these types of emails" or "you can only access emails sent to this person." Today, most scopes are designed around REST APIs, that is, around which specific endpoints you can access. Do you think future models will be able to understand and use a scope layer to dynamically limit the data they receive?

Justin/David: We recognize there is a potential need for scopes and have discussed it, but adding it to the protocol requires great caution. Our criterion is to first find a real problem that the current implementation can't solve, then prototype a solution using MCP's extensibility, and show that it delivers a good user experience before considering it for formal inclusion in the protocol. Authentication was different; it was designed more top-down.

Every time we hear scopes described, they sound sensible, but we need concrete end-to-end use cases that show where the current implementation falls short before we can take the discussion further. Given our emphasis on composability and logical grouping, we generally recommend keeping MCP servers relatively small: lots of different functionality is best implemented as separate, discrete servers that are then combined at the application level. Some people also object to having a single server handle authorization for multiple different services; in their view, each of those services should map to its own server, with the combination happening at the application level.

08 Security issues of MCP server distribution

Alessio (moderator): I think one of the great things about MCP is that it is language-agnostic. As far as I know, Anthropic doesn't have an official Ruby SDK, and neither does OpenAI. Developers like Alex Rudall have done a great job building these toolkits, but with MCP we no longer need a separate SDK adaptation for every programming language; we just build against one standard interface that Anthropic recognizes, which is great.

swyx (host): On the MCP Registry: there are currently five or six different registries, and the official registry that was originally announced has ceased operations. The registry model, with downloads, likes, reviews, and trust signals, naturally recalls traditional package repositories such as npm or PyPI, and that makes me uneasy, because even with social proof, the next update can turn a previously trusted package into a security threat. It feels like the trust system is being undermined by the very mechanisms meant to build trust. So I prefer to encourage people to use MCP Inspector, because it lets you look directly at the traffic, and many security issues can be spotted and addressed that way. How do you view the security issues and supply-chain risks of registries?

Justin/David: Yes, you are absolutely right. This is the classic supply-chain security problem every registry can face, and the industry has different answers to it. For example, you can follow a model like Apple's App Store: audit software strictly, with automated systems and human review teams. That does address the problem, and it works in certain specific settings. But I don't think that model fits the open-source ecosystem very well, because open-source registries such as the MCP registry, npm, and PyPI (the Python Package Index) are usually decentralized or community-driven.

swyx (host): These repositories all essentially face the problem of supply-chain attacks. Some of the core servers released in the official repository, especially special ones like the memory server and the reasoning/thinking servers, seem to be more than thin wrappers around existing APIs, and may be more convenient than calling an API directly.

Take the memory server. There are startups focused on memory, but this MCP memory server is only about 200 lines of code, which is very simple. If you need more sophisticated extensions, you may want a more mature solution, but if you just want to add memory quickly, it is a very good implementation and you may not need to depend on those companies' products. Do you have any stories to share about these special servers that aren't API wrappers?

Justin/David: There aren't many specific stories. A lot of these servers came out of the hackathons we mentioned earlier. People were very excited about the idea of MCP, and engineers inside Anthropic who wanted to implement memory or try related ideas could use MCP to quickly build prototypes that had previously been hard to do. You no longer need to be an end-to-end expert in a field, or have particular resources or a private codebase, to add something like memory to your application or service. Many servers were born that way. At the same time, we were also thinking about how broad a range of possibilities we wanted to showcase at launch.

swyx (host): I couldn't agree more. I think that's part of what made your launch successful: a rich set of examples people can copy, paste, and build on. I also want to highlight the filesystem MCP server, which provides file-editing capabilities. I remember Eric showing off his great SWE-bench project on the podcast, and the community was very interested in open-source file-editing tools. Some libraries and products on the market treat file editing as core intellectual property, and you simply open-sourced it, which is really cool.

Justin/David: The filesystem server is one of my personal favorites. It solved a practical limitation I had at the time: I had a hobby game project that I really wanted to connect to Claude and to the "artifacts" David mentioned earlier. Being able to have Claude interact with your local machine is huge, and I love it.

It's a classic example of a server born out of the frustrations that led us to create MCP and the need for exactly this kind of functionality. Justin was particularly struck by how clear and direct the path was from the problems we ran into, to MCP, to this server. So it holds a special place in our hearts and can be seen as a kind of spiritual origin point for the protocol.

09 MCP is now a large-scale project involving multiple companies

swyx (host): There's been a lot of lively discussion about MCP. If people want to take part in these debates, where should they go? Directly to the discussion pages of the specification repository?

Justin/David: It's easy to voice opinions on the Internet, but it takes effort to actually put them into practice. Justin and I are both old-school believers in open source, and we think concrete contributions are what matter in an open-source project. If you demonstrate your idea through real work, with concrete examples, and put in the effort to extend the SDKs with the functionality you want, your idea is much more likely to be adopted. If you only voice opinions, you may be ignored. We certainly value discussion, but given limited time and energy, we prioritize people who have put in more hands-on work.

The volume of MCP-related discussions and notifications is enormous, and we need a more scalable way to engage with the community so that the discussion stays valuable and productive. Running a successful open-source project sometimes means making hard decisions that won't please everyone. As a maintainer, you have to be clear about the project's actual vision and hold to that direction even when people disagree, because there may always be another project that suits their ideas better.

MCP is a good example: it's just one of many possible solutions to a common set of problems in this space. If you don't agree with the direction the core maintainers have chosen, the advantage of open source is that you have options; you can fork the project. We do want community feedback and are working to make the feedback process scale better, but we will also make the calls our intuition tells us are right. That can generate a lot of controversy in open-source discussions, but that is sometimes the nature of such projects, especially in fast-moving fields.

swyx (host): Fortunately, you both seem no strangers to hard decisions. Facebook's open-source projects offer a lot of experience to learn from; even if you weren't directly involved, you can learn from the practices of those who were. I was deeply involved in the React ecosystem and once set up a working group whose discussions were public. Every member had a voice, and all of them were people doing real work and making important contributions. That model worked very well for a while. GraphQL's trajectory and early popularity are somewhat similar to MCP's today. I followed GraphQL's development, and Facebook eventually donated it to an open-source foundation.

That raises the question: should MCP do the same? It isn't a simple yes or no; there are trade-offs. Most people are currently happy with Anthropic's work on MCP; after all, you created it and run it. But once a project grows past a certain size, it may hit a ceiling where people notice it is a company-led project. People eventually expect true open standards to be driven by non-profit organizations, with multiple stakeholders and good governance processes, such as those run by the Linux Foundation or the Apache Foundation. I know it may be too early to discuss this, but I'd like to hear your thoughts.

Justin/David: Governance in open source is indeed an interesting and complex issue. On one hand, we are fully committed to making MCP an open standard, an open protocol, and an open project, and everyone interested is welcome to participate. Progress so far is good: for example, many ideas behind Streamable HTTP came from different companies such as Shopify, and that kind of cross-company collaboration has been very effective. But we do worry that formal standardization, especially through traditional standards bodies and their processes, could significantly slow a project in a field moving as fast as AI. So we need a balance: keep the current participants actively involved and contributing, address their concerns about the governance model, and find the right long-term direction without repeated organizational upheaval.

We really want MCP to be a truly open project. Although Anthropic started it and David and I both work at Anthropic, we don't want it to be seen as just "Anthropic's protocol." We hope AI labs and companies of all kinds will participate in it or use it. That is very challenging: it means balancing everyone's interests while avoiding the trap of design by committee stalling the project. There are many successful governance models in open source, and I think most of the subtleties revolve around corporate sponsorship and how much say companies have in decision-making. We will handle these issues carefully, and we absolutely want MCP to become a true community project.

In fact, many people outside Anthropic already have commit and maintainer rights on MCP. For example, the Pydantic team has commit rights to the Python SDK; companies such as Block have contributed heavily to the specification; and the SDKs for languages such as Java, C#, and Kotlin are built by different organizations such as Microsoft, JetBrains, and Spring AI, and those teams have full ownership of them. So if you look closely, it is already a large project with multiple companies and many people contributing; it is not just the two of us who have commit rights.

Alessio (moderator): Do you have any particular "wish list" for future MCP servers or clients? Are there any features you particularly want people to build that are not yet implemented?

Justin/David: I'd like to see more clients that support sampling. I'd also like to see someone build specialized servers, like one that summarizes a Reddit discussion thread, or one that fetches the last week's activity in EVE Online. I'd especially like the former to be model-agnostic, not because I want to use models other than Claude (Claude is the best at the moment), but because I'd like to see client frameworks that support sampling.

More broadly, it would be great if more clients supported the full MCP specification. We designed it with incremental adoption in mind, and it would be great to see these carefully thought-out primitives widely used. Thinking back to my original motivation for working on MCP, and my excitement about the filesystem server:

I'm a game developer in my spare time, so I'd love to see an MCP client or server integrated with the Godot engine (which I was using for my games at the time). That would make it easy to bring AI into my games, or to have Claude run and test them, for example letting Claude play Pokémon. There's already groundwork for that idea. And why not go a step further and have Claude build 3D models for you in Blender?

swyx (host): Honestly, even things like shader code are theoretically possible. It's really beyond my area of expertise. But it's amazing what developers can do when you give them the support and tools. We're planning a "Claude plays Pokémon" hackathon with David Hersh. I hadn't originally planned to incorporate MCP into it, but now it seems like it might be worth considering.