The "USB-C Moment" of AI evolution: in November 2024, Anthropic released the Model Context Protocol (MCP), and it is sending shockwaves through Silicon Valley. Dubbed the "USB-C of the AI world", this open standard not only restructures how large models connect to the outside world, but may also hold the key to breaking the AI monopoly and reshaping the production relations of digital civilization. While we are still debating the parameter count of GPT-5, MCP has quietly paved a decentralized path to the AGI era...

Bruce: I've been studying the Model Context Protocol (MCP) recently. After ChatGPT, it is the second thing in AI that has truly excited me, because it could solve three problems I have been thinking about for years:

How can ordinary people, not just scientists and geniuses, participate in the AI industry and earn income from it?

What are the win-win combinations of AI and Ethereum?

How can we achieve AI d/acc, avoiding both monopoly and censorship by large centralized companies and the destruction of humanity by AGI?

01 What is MCP?

MCP is an open standard that simplifies integrating LLMs with external data sources and tools. If we compare an LLM to the Windows operating system, then Cursor and similar applications are the keyboard and hardware, and MCP is the USB port: external data and tools can be plugged in flexibly, and users can then read and use them.
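To make the "USB port" analogy concrete: MCP messages are JSON-RPC 2.0 objects, and a client asks a server to run one of its tools with a `tools/call` request whose `params` carry the tool `name` and its `arguments`. The minimal Python sketch below only builds such a message; the tool name `search_bird_notes` is hypothetical, and the transport (stdio or HTTP) and the preceding `initialize` handshake are omitted.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",  # method name used by the MCP specification
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# "search_bird_notes" is a hypothetical tool, used only for illustration.
print(make_tool_call(1, "search_bird_notes", {"query": "kingfisher"}))
```

Any client that emits this shape can talk to any server that understands it, which is what makes the plug-in model work.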

MCP provides three capabilities to extend LLMs:

Resources (knowledge expansion)

Tools (execute functions, call external systems)

Prompts (pre-written prompt templates)
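The three capabilities correspond to separate request methods in the MCP specification (`resources/read`, `tools/call`, `prompts/get`). The toy server below dispatches those methods from in-memory tables. The bird-notes data, the `lookup_species` tool, and the `summarize_note` prompt are hypothetical, result shapes are simplified from the spec, and a real server would use an official MCP SDK and a proper transport instead of this hand-written dispatcher.

```python
import json

# Hypothetical content for a toy "bird notes" MCP Server.
RESOURCES = {"notes://kingfisher": "Kingfishers dive for small fish."}
PROMPTS = {"summarize_note": "Summarize this bird note in one sentence:\n{note}"}


def lookup_species(name: str) -> str:
    """Toy tool: return the stored note for a species, if any."""
    return RESOURCES.get(f"notes://{name}", "no note found")


TOOLS = {"lookup_species": lookup_species}


def handle(raw: str) -> dict:
    """Dispatch one JSON-RPC request to a resource, tool, or prompt handler."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "resources/read":  # Resources: knowledge expansion
        uri = params["uri"]
        result = {"contents": [{"uri": uri, "text": RESOURCES[uri]}]}
    elif method == "tools/call":  # Tools: execute a function
        output = TOOLS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": output}]}
    elif method == "prompts/get":  # Prompts: fill a pre-written template
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        result = {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": req.get("id"), "error": error}
    return {"jsonrpc": "2.0", "id": req.get("id"), "result": result}


request = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
           "params": {"name": "lookup_species", "arguments": {"name": "kingfisher"}}}
print(handle(json.dumps(request))["result"]["content"][0]["text"])
# Kingfishers dive for small fish.
```

Error handling for unknown resource, tool, or prompt names is left out to keep the sketch short.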

Anyone can develop and host an MCP Server, and the server can be taken offline or stopped at any time.

02 Why do we need MCP?

Today's LLMs are trained with massive compute on as much data as possible, producing enormous numbers of parameters that bake knowledge into the model so it can be reproduced in conversation. This approach has several problems:

Processing so much data takes a great deal of time and hardware, and the training knowledge is often already out of date.

Models with huge parameter counts are hard to deploy and run on local devices, yet in most scenarios users need only a small fraction of that knowledge.

Some models use web crawlers to fetch external information and stay current, but the limits of crawling and the uneven quality of web data can make their output more misleading.

Because AI has brought creators little benefit, many websites and content creators have started deploying anti-AI measures that generate large amounts of junk content, which will gradually degrade LLM quality.

LLMs are hard to extend with external functions and operations. Asked to call the GitHub API, for example, a model will generate code from possibly outdated documentation and cannot guarantee that it executes correctly.

03 Architectural evolution: from Fat LLM to Thin LLM + MCP

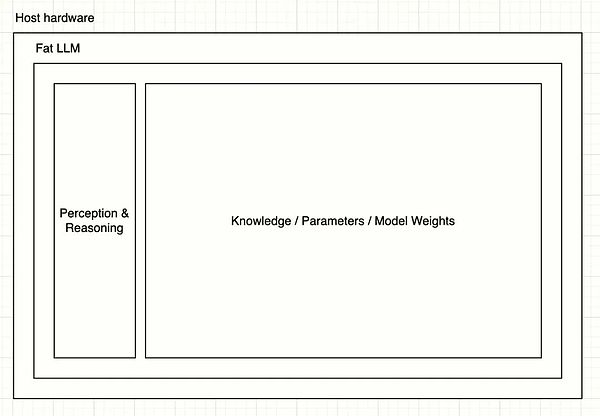

We can view today's large models as Fat LLMs, whose architecture is shown in the simple diagram above.

User input is decomposed and reasoned about in the Perception & Reasoning layer, which then draws on the model's vast parameters to generate the result.

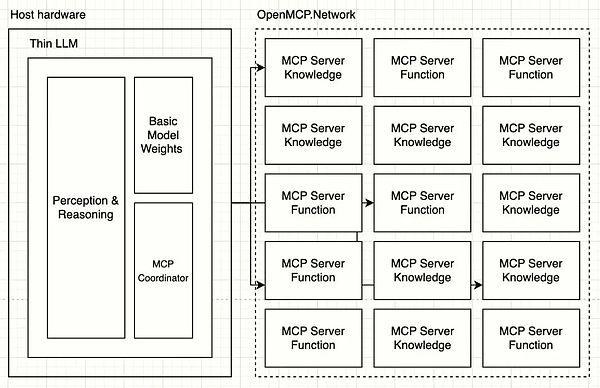

With MCP, LLMs could focus on language parsing itself and offload knowledge and capabilities, becoming Thin LLMs, as shown in the diagram above.

In a Thin LLM architecture, the Perception & Reasoning layer focuses on parsing the full range of information from the human physical environment into tokens, including but not limited to speech, tone, smell, images, text, gravity, and temperature. An MCP Coordinator then orchestrates hundreds of MCP Servers to complete the task. Thin LLMs would be far cheaper and faster to train, and their hardware requirements for deployment would be very low.
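The "MCP Coordinator" is this article's own concept rather than a component named in the MCP specification. A minimal sketch of what it might do, assuming each server advertises a set of topics: route a task to every online server whose topics match, then fan the query out. `ServerEntry`, `Coordinator`, and the plain callables standing in for real MCP client sessions are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ServerEntry:
    """Hypothetical registry record describing one MCP Server."""
    name: str
    topics: set[str]
    call: Callable[[str], str]  # stands in for a real MCP client session
    online: bool = True


@dataclass
class Coordinator:
    servers: list[ServerEntry] = field(default_factory=list)

    def register(self, entry: ServerEntry) -> None:
        self.servers.append(entry)

    def route(self, task_topics: set[str]) -> list[ServerEntry]:
        """Return online servers that advertise at least one requested topic."""
        return [s for s in self.servers if s.online and s.topics & task_topics]

    def run(self, task_topics: set[str], query: str) -> dict[str, str]:
        """Send the query to every matching server and collect the answers."""
        return {s.name: s.call(query) for s in self.route(task_topics)}


coordinator = Coordinator()
coordinator.register(ServerEntry("bird-notes", {"birds"}, lambda q: f"bird note on {q}"))
coordinator.register(ServerEntry("weather", {"weather"}, lambda q: "sunny", online=False))
print(coordinator.run({"birds", "weather"}, "kingfisher"))
# {'bird-notes': 'bird note on kingfisher'}
```

Marking a server `online=False` removes it from routing, so the coordinator simply loses that capability, which is the property the article relies on later.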

04 How MCP solves the three major problems

How can ordinary people participate in the AI industry?

Anyone with a unique talent can create their own MCP Server to serve LLMs. For example, a bird enthusiast could publish their bird notes through MCP. When someone asks an LLM about birds, the LLM calls that bird-notes MCP Server, and the creator receives a share of the revenue.

This makes for a more precise and automated creator-economy loop: service content is more standardized, and the number of calls and output tokens can be counted exactly. LLM providers could even call several bird-notes MCP Servers at once and let users choose and rate the results, so that higher-quality servers earn higher matching weights.
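Metering and rating could start as a simple per-server ledger. In this sketch the token counts are assumed to be reported by the LLM provider's own tokenizer, and the "matching weight" is an average user rating smoothed toward a neutral prior; the 1 to 5 scale and the prior are illustrative choices, not part of the source, and the server names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


class UsageLedger:
    """Hypothetical per-server meter for calls, output tokens, and user ratings."""

    def __init__(self) -> None:
        self.calls: dict[str, int] = defaultdict(int)
        self.output_tokens: dict[str, int] = defaultdict(int)
        self.ratings: dict[str, list[int]] = defaultdict(list)

    def record_call(self, server: str, tokens: int) -> None:
        """Count one call and the output tokens it produced."""
        # Token counts are assumed to come from the LLM provider's tokenizer.
        self.calls[server] += 1
        self.output_tokens[server] += tokens

    def rate(self, server: str, score: int) -> None:
        """Store a user rating on a 1-5 scale."""
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.ratings[server].append(score)

    def weight(self, server: str, prior: float = 3.0, prior_count: int = 2) -> float:
        """Matching weight: average rating smoothed toward a neutral prior,
        so a single early vote does not dominate."""
        scores = self.ratings.get(server, [])
        return (prior * prior_count + sum(scores)) / (prior_count + len(scores))

    def best(self, candidates: list[str]) -> str:
        """Pick the highest-weight candidate; ties go to the first name alphabetically."""
        return max(sorted(candidates), key=self.weight)


ledger = UsageLedger()
ledger.record_call("birds-alice", tokens=120)
ledger.record_call("birds-bob", tokens=80)
ledger.rate("birds-alice", 5)
ledger.rate("birds-bob", 2)
print(ledger.best(["birds-alice", "birds-bob"]))  # birds-alice
```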

The win-win combination of AI and Ethereum

a. We can build OpenMCP.Network, an Ethereum-based incentive network for creators. MCP Servers must be hosted and provide stable service; users pay the LLM provider, and the LLM provider distributes incentives through the network to the MCP Servers it actually called. This keeps the whole network sustainable and stable and motivates MCP creators to keep producing high-quality content. The network would use smart contracts to make incentives automated, transparent, trustworthy, and censorship-resistant. Signing, permission checks, and privacy protection can be implemented with Ethereum wallets, zero-knowledge (ZK) proofs, and other technologies.

b. Build MCP Servers for on-chain Ethereum operations, such as account abstraction (AA) wallet services, so that users can make wallet payments in natural language through an LLM without exposing private keys or permissions to the LLM.

c. Various developer tools could also further simplify Ethereum smart contract development and code generation.
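The core arithmetic of such an incentive contract is a pro-rata split of a reward pool by metered usage. Below is a minimal Python sketch of that arithmetic only, assuming payouts are in integer units such as wei and using the largest-remainder method (an illustrative choice, not specified by the source) so no units are lost to rounding; an actual deployment would implement the logic in a smart contract.

```python
def split_payout(pool: int, usage: dict[str, int]) -> dict[str, int]:
    """Split an integer reward pool (e.g. in wei) pro rata by metered usage.

    Integer division leaves a remainder; it is handed out one unit at a time
    to the servers with the largest discarded fractions, so the payouts
    always sum exactly to `pool`.
    """
    total = sum(usage.values())
    if total == 0:
        return {name: 0 for name in usage}
    base = {name: pool * used // total for name, used in usage.items()}
    leftover = pool - sum(base.values())
    # Largest-remainder method: rank by the fraction integer division dropped.
    order = sorted(usage, key=lambda name: (-(pool * usage[name] % total), name))
    for name in order[:leftover]:
        base[name] += 1
    return base


print(split_payout(100, {"alice": 1, "bob": 1, "carol": 1}))
# {'alice': 34, 'bob': 33, 'carol': 33}
```

Weighting by output tokens, by calls, or by a mix of both is a policy decision the network would have to make; the function only needs some non-negative usage number per server.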
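One way to keep keys away from the LLM is for the MCP tool to return only an unsigned payment intent, checked against a spending policy the user configured in their AA wallet; signing stays in the wallet, with explicit user confirmation above a threshold. Everything here (`PaymentIntent`, `prepare_payment`, the allowlist, the placeholder address, and the 0.01 ETH limit) is a hypothetical illustration, not an existing wallet API.

```python
from dataclasses import dataclass

# Hypothetical policy the user configured in their smart-contract wallet.
AUTO_APPROVE_LIMIT_WEI = 10**16  # 0.01 ETH
ALLOWED_RECIPIENTS = {"0x" + "ab" * 20}  # placeholder address, stored lowercase


@dataclass(frozen=True)
class PaymentIntent:
    """Unsigned transfer request; the wallet, never the LLM, signs it later."""
    to: str
    amount_wei: int
    needs_user_confirmation: bool


def prepare_payment(to: str, amount_wei: int) -> PaymentIntent:
    """Tool exposed to the LLM: validate a payment request without touching keys."""
    if amount_wei <= 0:
        raise ValueError("amount must be positive")
    recipient = to.lower()  # compare hex addresses case-insensitively
    if recipient not in ALLOWED_RECIPIENTS:
        raise PermissionError("recipient is not on the user's allowlist")
    return PaymentIntent(
        to=recipient,
        amount_wei=amount_wei,
        needs_user_confirmation=amount_wei > AUTO_APPROVE_LIMIT_WEI,
    )


print(prepare_payment("0x" + "AB" * 20, 5 * 10**15))
```

The LLM only ever sees the intent; the wallet decides whether to sign it.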

Achieving AI decentralization

a. MCP Servers decentralize AI knowledge and capabilities: anyone can create and host an MCP Server, register it on a platform such as OpenMCP.Network, and earn incentives based on calls. No single company can control all MCP Servers. If an LLM provider pays MCP Servers unfairly, creators will block that company, its users will stop getting quality results and switch to other LLM providers, and competition becomes fairer.

b. Creators can apply fine-grained permission control to their own MCP Servers to protect privacy and copyright. Thin LLM providers will need to offer reasonable incentives to get creators to contribute high-quality MCP Servers.

c. The capability gap between Thin LLMs will gradually close, because the space of human language is bounded enough to be covered exhaustively and evolves very slowly. LLM providers will need to focus their attention and funding on high-quality MCP Servers rather than on refining models with ever more GPUs.

d. AGI capabilities will be decentralized and reduced: LLMs handle only language processing and user interaction, while specific capabilities are distributed across many MCP Servers. Such an AGI would not threaten humanity, because once its MCP Servers are shut down it can only carry on basic conversation.
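Fine-grained permission control can be sketched as a per-caller grant table checked before each call. How callers authenticate (for example by signing a challenge with an Ethereum wallet) is out of scope here; the `Grant` and `AccessPolicy` names and the daily-quota rule are hypothetical, and resetting the quota each day is left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Grant:
    """What one caller may do on this MCP Server (hypothetical policy format)."""
    tools: set[str]
    daily_quota: int
    used_today: int = 0


@dataclass
class AccessPolicy:
    grants: dict[str, Grant] = field(default_factory=dict)

    def authorize(self, caller: str, tool: str) -> bool:
        """Allow a call only if the caller holds a grant for this tool with quota left."""
        grant = self.grants.get(caller)
        if grant is None or tool not in grant.tools:
            return False
        if grant.used_today >= grant.daily_quota:
            return False
        grant.used_today += 1  # consume one unit of today's quota
        return True


policy = AccessPolicy({"llm-provider-a": Grant({"lookup_species"}, daily_quota=1000)})
print(policy.authorize("llm-provider-a", "lookup_species"))    # True
print(policy.authorize("llm-provider-a", "export_all_notes"))  # False
print(policy.authorize("unknown-caller", "lookup_species"))    # False
```

Because the check runs on the creator's own server, no LLM provider can bypass it.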

05 Overall Review

The evolution toward an LLM + MCP Servers architecture is essentially the decentralization of AI capabilities, which reduces the risk of AGI destroying humanity.

Because LLMs call MCP Servers programmatically, the number of calls and their input/output can be counted automatically at the token level, laying the foundation for an AI creator economy.

A good economic system can motivate creators to actively contribute high-quality MCP Servers, advancing humanity as a whole and creating a positive flywheel. Creators would no longer resist AI, and AI would create more jobs and income, distributing the profits of monopolistic companies such as OpenAI more fairly.

Given its characteristics and the needs of creators, this economic system is well suited to being built on Ethereum.

06 Future Outlook: Scenarios for the Next Stage

MCP and MCP-like protocols will keep emerging, and several major companies will compete to define the standard.

MCP-based LLMs will appear: small models focused on parsing and processing human language, using MCP Coordinators to access the MCP network. These LLMs will discover and schedule MCP Servers automatically, without complex manual configuration.

MCP Network service providers will emerge, each with its own economic incentive system, where MCP creators can register and host their Servers to earn income.

If the MCP Network's economic incentive system is built on Ethereum smart contracts, transactions on the Ethereum network could conservatively increase by a factor of roughly 140 (assuming a very conservative 100 million MCP Server calls per day, each settled as one transaction, against the current 12 s block time with about 100 txs per block).
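The estimate follows directly from the stated assumptions (100 million calls per day, 12 s per block, about 100 transactions per block), plus the implicit one that each MCP Server call is settled as its own on-chain transaction with no batching:

```latex
\begin{aligned}
\text{blocks per day} &= \frac{86\,400\ \text{s}}{12\ \text{s/block}} = 7\,200 \\
\text{current transactions per day} &= 7\,200 \times 100 = 720\,000 \\
\text{increase factor} &= \frac{100\,000\,000}{720\,000} \approx 139
\end{aligned}
```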