Guest author of Tencent Technology's "AI Future Compass": Hao Boyang

Less than a month later, DeepSeek has once again shaken the global AI community.

In December last year, DeepSeek's launch of DeepSeek-V3 caused a huge stir in the global AI field. The model matched GPT-4o and Claude 3.5 Sonnet at an extremely low training cost, shocking the industry. Tencent Technology previously published an in-depth analysis of this model, explaining in the simplest, most direct terms the technical background that lets it achieve both low cost and high efficiency.

Unlike last time, the newly released DeepSeek-R1 is not only low-cost but also represents a significant technical breakthrough, and it is open source as well.

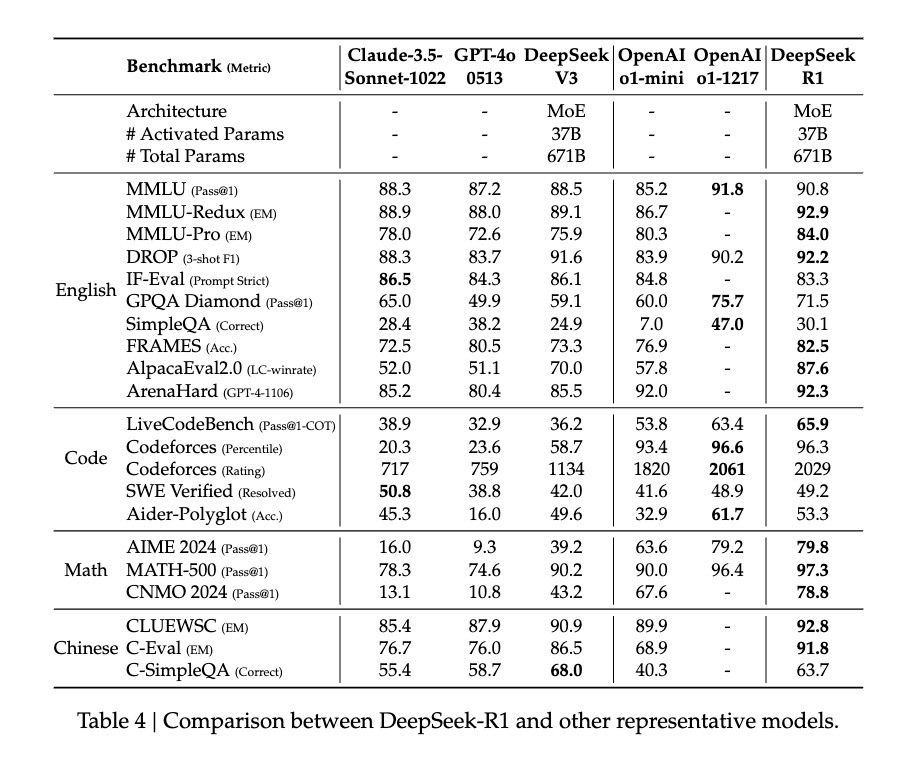

The new model keeps its predecessor's cost-effectiveness, reaching OpenAI o1-level performance at roughly one-tenth of the cost.

As a result, many industry insiders have gone so far as to declare that "DeepSeek will succeed OpenAI," while many others have turned their attention to the breakthroughs in its training methods.

For example, Elvis, a former Meta AI employee and well-known commentator on AI papers on Twitter, called the DeepSeek-R1 paper a treasure trove: it explores multiple ways to improve the reasoning capabilities of large language models and reports clearer emergent properties.

Yuchen Jin, another influential voice in the AI community, considers one finding of the DeepSeek-R1 paper especially significant: using pure RL alone, the model learns to reason autonomously and to reflect on its own reasoning.

Jim Fan, a lead of NVIDIA's GEAR Lab, also pointed out on Twitter that DeepSeek-R1 computes ground-truth rewards with hard-coded rules instead of relying on a learned reward model that RL could easily exploit. This led to the emergence of self-reflection and exploratory behavior in the model.

Because DeepSeek has fully open-sourced these important findings, Jim Fan even remarked that this is what OpenAI should have been doing.

So the question is, what do they mean by training the model using pure RL methods? And what can the model's "Aha moment" prove about the emergence of AI's capabilities? What we really want to know is, what does this important innovation by DeepSeek-R1 mean for the future development of the AI field?

Using the simplest recipe, returning to the purest reinforcement learning

Since the release of OpenAI's o1, strengthening models' reasoning has become the focus of the industry.

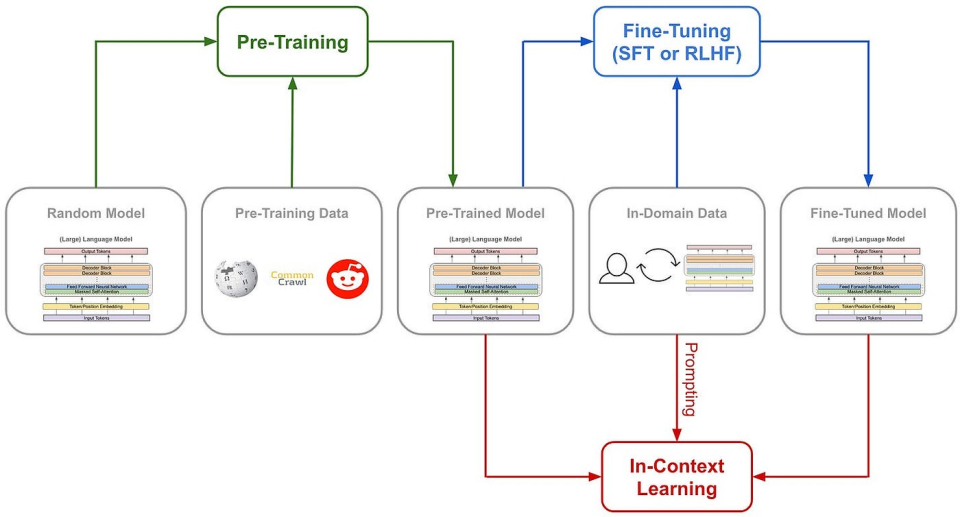

Typically, a model's reasoning ability is improved during training with a single, fixed training method.

The DeepSeek team, however, tried three completely different technical paths while developing R1: direct reinforcement learning (R1-Zero), multi-stage progressive training (R1), and model distillation. All three succeeded. The multi-stage training method and model distillation both contain many innovations that have had an important impact on the industry.

The most exciting of the three is the direct reinforcement learning path, because DeepSeek-R1-Zero is the first openly published model to demonstrate that this approach works.

First, consider the traditional way of training an AI's reasoning ability: add a large number of chain-of-thought (CoT) examples during supervised fine-tuning (SFT), and use example-based, complex neural-network reward models such as process reward models (PRMs) to teach the model to think step by step.

Some approaches even add Monte Carlo tree search (MCTS), letting the model search among many possibilities for the best outcome.

(Traditional model training path)

DeepSeek-R1-Zero, by contrast, takes an unprecedented path of "pure" reinforcement learning. It drops supervised fine-tuning (SFT) entirely, including the usual pre-written chain-of-thought demonstrations, and relies solely on simple reward and penalty signals to optimize the model's behavior.

It's like letting a genius child learn to solve problems purely through constant trial and error and feedback, without any examples or guidance.

DeepSeek-R1-Zero relies on only a minimal, rule-based reward system to draw out the AI's reasoning ability.

There are just two rules:

1. Accuracy reward: this evaluates whether the response is correct. Correct answers are rewarded and incorrect ones are penalized. The check itself is simple: for math problems with deterministic results, the model must give its final answer in a specified format (such as between <answer> and </answer>) so it can be verified automatically; for programming problems, a compiler can generate feedback by running the code against predefined test cases.

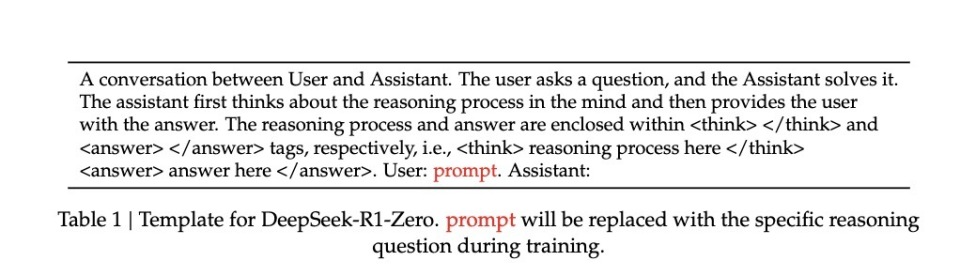

2. Format reward: The format reward forces the model to place its thought process between <think> and </think> tags. Failure to do so will result in a penalty, while doing so will be rewarded.
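The two rules above are simple enough to express directly in code. The sketch below is a minimal illustration under stated assumptions, not DeepSeek's implementation: the tag names follow the prose, the 1.0/0.0 reward values are assumed, exact string matching stands in for a real math-answer checker, and calling a Python function against test cases stands in for compiling and running submitted code.

```python
import re

# Assumed tag layout from the text: reasoning in <think>...</think>,
# final answer in <answer>...</answer>.
FORMAT_PATTERN = re.compile(
    r"^\s*<think>.+?</think>\s*<answer>.+?</answer>\s*$", re.DOTALL
)
ANSWER_PATTERN = re.compile(r"<answer>(.+?)</answer>", re.DOTALL)


def format_reward(response: str) -> float:
    """1.0 if the response follows the <think>/<answer> layout, else 0.0."""
    return 1.0 if FORMAT_PATTERN.match(response) else 0.0


def math_accuracy_reward(response: str, reference: str) -> float:
    """1.0 if the extracted final answer matches the reference exactly."""
    match = ANSWER_PATTERN.search(response)
    if match is None:
        return 0.0  # no parsable answer counts as wrong
    return 1.0 if match.group(1).strip() == reference.strip() else 0.0


def code_accuracy_reward(candidate, test_cases) -> float:
    """1.0 only if the candidate passes every (args, expected) test case."""
    try:
        passed = all(candidate(*args) == expected for args, expected in test_cases)
    except Exception:
        return 0.0  # a crash counts as a failed test
    return 1.0 if passed else 0.0


def total_reward(response: str, reference: str) -> float:
    """Sum of the two rule-based signals for a math problem."""
    return math_accuracy_reward(response, reference) + format_reward(response)


if __name__ == "__main__":
    sample = "<think>2 + 2 is 4</think> <answer>4</answer>"
    print(total_reward(sample, "4"))
```

Because every signal is computed by fixed rules, there is no learned reward model for the policy to exploit.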

To observe the model's natural progress during reinforcement learning (RL) as accurately as possible, DeepSeek deliberately limited the system prompt to this structural format alone, avoiding any content-specific biases, such as requiring reflective reasoning or promoting particular problem-solving strategies.

(R1 Zero system prompts)
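For illustration, a structure-only prompt of this kind can be built as a plain template. The wording below is a paraphrase written for this sketch, not DeepSeek's verbatim system prompt, and `build_prompt` is a hypothetical helper; the point is that the template fixes the output layout while saying nothing about how to reason.

```python
# Paraphrased, structure-only template (illustrative wording, not verbatim).
TEMPLATE = (
    "A conversation between User and Assistant. The user asks a question, "
    "and the Assistant solves it. The Assistant first thinks through the "
    "reasoning process and then gives the answer. The reasoning process and "
    "answer are enclosed within <think> </think> and <answer> </answer> tags, "
    "respectively.\nUser: {question}\nAssistant:"
)


def build_prompt(question: str) -> str:
    """Insert the question; no hints about reflection or solution strategy."""
    return TEMPLATE.format(question=question)


if __name__ == "__main__":
    print(build_prompt("What is 2 + 2?"))
```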

With such simple rules in place, the model samples several answers, compares them, and improves itself under GRPO (Group Relative Policy Optimization).

GRPO itself is fairly simple. For each question it samples a group of answers and computes each answer's advantage by comparing its reward with the rest of the group, then uses that advantage in the policy-gradient update. Because the group itself serves as the baseline, no separate value (critic) model is needed, which reduces training instability and improves learning efficiency.
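The group-relative comparison comes down to a normalization of rewards within each group. A minimal sketch, assuming the common form A_i = (r_i − mean) / std; whether the population or sample standard deviation is used, and the small `eps` guard, are choices made here for illustration.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Score each sampled answer relative to its own group.

    A_i = (r_i - mean(r)) / (std(r) + eps). Answers above the group average
    get a positive advantage, those below get a negative one, and a group in
    which every answer scored the same yields all-zero advantages.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; implementations differ here
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four answers to one question, two of them correct.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

In this example the two correct answers receive an advantage of about +1 and the two wrong ones about −1, so the update pushes probability toward the answers that beat their own group.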

Put simply, imagine a teacher posing a question and having the model answer it several times. Each answer is scored with the reward and penalty rules above, and the model is updated to favor high-scoring answers and avoid low-scoring ones.

The process is roughly like this:

Input question → Model generates multiple answers → Rule system scores → GRPO calculates relative advantage → Update model.
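A toy, fully self-contained version of this loop can make the steps concrete. It is a simplified sketch, not DeepSeek's training code: the "model" is a hypothetical softmax distribution over three candidate answers to a single question, and the update is a plain group-relative policy-gradient step that omits GRPO's ratio clipping and KL penalty.

```python
import math
import random
from statistics import mean, pstdev

# Hypothetical toy setup: one question, three answers the "model" can emit.
CANDIDATES = ["3", "4", "5"]
REFERENCE = "4"  # ground truth used by the rule-based reward


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rule_reward(answer):
    # Accuracy rule only: 1.0 for the correct answer, 0.0 otherwise.
    return 1.0 if answer == REFERENCE else 0.0


def train(steps=200, group_size=8, lr=0.5, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(CANDIDATES)  # start from a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        # 1) Generate a group of answers to the same question.
        idxs = rng.choices(range(len(CANDIDATES)), weights=probs, k=group_size)
        # 2) Score each answer with the rule system.
        rewards = [rule_reward(CANDIDATES[i]) for i in idxs]
        # 3) Group-relative advantage: no critic / value network needed.
        mu, sigma = mean(rewards), pstdev(rewards)
        advs = [(r - mu) / (sigma + 1e-6) for r in rewards]
        # 4) Policy-gradient step; d log p_i / d logit_j = 1[i == j] - p_j.
        for j in range(len(logits)):
            grad = sum(
                a * ((1.0 if i == j else 0.0) - probs[j])
                for i, a in zip(idxs, advs)
            )
            logits[j] += lr * grad / group_size
    return softmax(logits)


if __name__ == "__main__":
    final_probs = train()
    print({c: round(p, 3) for c, p in zip(CANDIDATES, final_probs)})
```

Groups in which every answer is right (or every answer is wrong) produce zero advantage and no update; learning happens only when answers within a group differ, which is the "relative" part of the method.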

This direct training method has several significant advantages. First, it is more efficient and can be completed in less time. Second, it consumes fewer resources: by skipping SFT and complex reward models, it greatly reduces the demand for computing power.

More importantly, this method really makes the model learn to think, and it learns in a "eureka" way.

Learning in its own language, in the "eureka" moment

How do we see that the model has really learned to "think" under this very "primitive" method?

The paper records a noteworthy case: while working on a problem involving the equation √(a − √(a + x)) = x, an intermediate version of the model suddenly stopped and wrote "Wait, wait. Wait. That's an aha moment I can flag here," then re-examined its entire solution. This spontaneous behavior, resembling a human epiphany, was not pre-programmed.
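The equation in the paper's example is √(a − √(a + x)) = x. For readers curious about the math itself, the usual first move is to square twice, under the conditions each step requires, which turns it into a quartic polynomial; this worked derivation is standard algebra added for illustration, not a transcript of the model's output.

```latex
\begin{aligned}
\sqrt{a - \sqrt{a + x}} = x
  &\;\Longrightarrow\; a - \sqrt{a + x} = x^2 && (x \ge 0) \\
  &\;\Longrightarrow\; \sqrt{a + x} = a - x^2 && (a - x^2 \ge 0) \\
  &\;\Longrightarrow\; a + x = (a - x^2)^2 = a^2 - 2a x^2 + x^4 \\
  &\;\Longrightarrow\; x^4 - 2a x^2 - x + a^2 - a = 0 .
\end{aligned}
```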

Such epiphanies are often the moments when the model's thinking ability takes a leap.

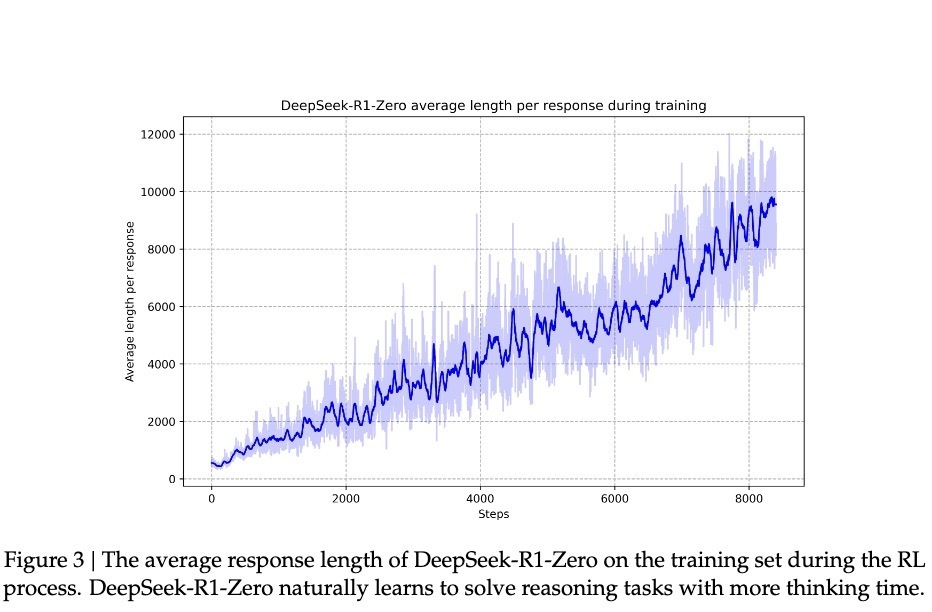

According to DeepSeek's research, the model's progress is not uniformly gradual. During reinforcement learning, response length at times rises markedly, and these "jump points" are often accompanied by a qualitative change in problem-solving strategy. The pattern resembles a person's sudden insight after long contemplation and hints at a breakthrough in deeper cognition.

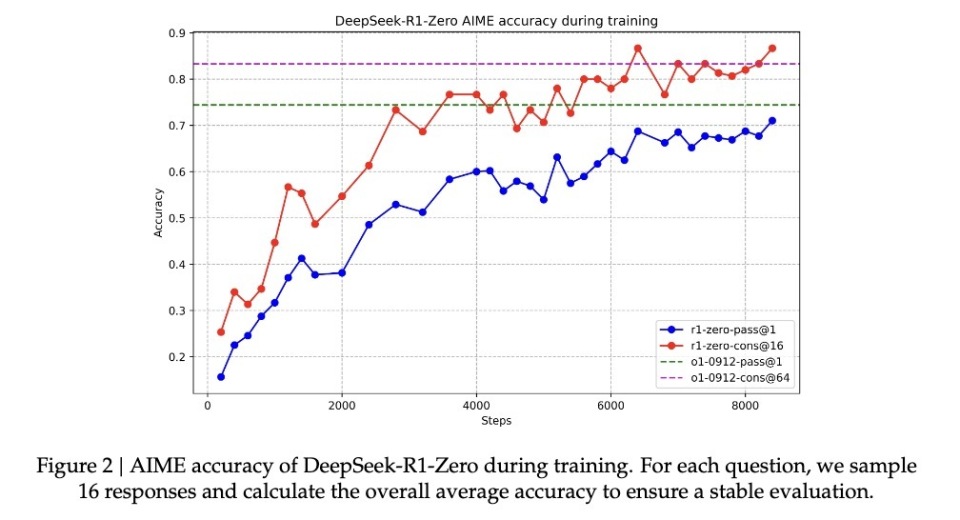

Driven by these epiphanies, R1-Zero's pass@1 accuracy on the renowned AIME 2024 math competition rose from 15.6% at the start of training to 71.0%. With majority voting over 64 sampled answers per question, accuracy reached 86.7%. This is not rote memorization: AIME problems demand deep mathematical intuition and creative thinking rather than mechanical formula application, so the model must be reasoning to improve this much.
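The 86.7% figure comes from majority voting (reported in the paper as cons@64): the model answers each question 64 times and the most common final answer is taken. A minimal sketch of that aggregation, with hypothetical sample answers, shows how a question can be scored correct by consensus even when individual attempts are often wrong.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most common final answer among sampled attempts.

    Ties go to the answer seen first, because Counter.most_common orders
    equal counts by first occurrence.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    return Counter(answers).most_common(1)[0][0]


def single_vs_consensus(samples, reference):
    """Average single-attempt accuracy vs. majority-vote accuracy."""
    single = sum(a == reference for a in samples) / len(samples)
    consensus = 1.0 if majority_vote(samples) == reference else 0.0
    return single, consensus


if __name__ == "__main__":
    # Hypothetical attempts at one question whose answer is "18".
    print(single_vs_consensus(["18", "18", "17", "18", "20"], "18"))
```

Here only 60% of individual attempts are right, yet the consensus answer is correct, which is why majority-vote scores sit above single-attempt scores.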

Another core piece of evidence that the model has genuinely learned to reason this way is that its response length adapts naturally to problem complexity. This adaptive behavior suggests it is not simply applying a template but recognizing how hard a problem is and investing more "thinking time" accordingly, just as humans naturally spend longer on a complex integral than on simple addition.

Perhaps the most convincing sign is that the ability carries beyond math. R1-Zero was also evaluated on coding, including the competitive programming platform Codeforces, where it reached a rating of 1444; after further training, DeepSeek-R1 went on to outperform 96.3% of human participants there. This cross-domain performance suggests that the model is not simply memorizing techniques for one field but has acquired a more general reasoning ability.

This is a smart but inarticulate genius

Although R1-Zero has demonstrated remarkable reasoning ability, researchers soon discovered a serious problem: its thought process is often difficult for humans to understand.

The paper candidly points out that this model, trained purely through reinforcement learning, has problems with "poor readability" and "language mixing".

This is understandable: R1-Zero optimized its behavior entirely through reward and penalty signals, with no human-demonstrated "standard answers" for reference. It is like a genius child who has invented a personal problem-solving method: the method works, but the child struggles to explain it coherently. The model may switch among several languages mid-solution or develop an idiosyncratic style of expression, making its reasoning difficult to follow.

It is precisely to solve this problem that the research team developed the improved version DeepSeek-R1. By introducing more traditional "cold-start data" and a multi-stage training process, R1 not only maintains its powerful reasoning ability, but also learns to express its thought process in a way that is easy for humans to understand. It's like providing that genius child with a communication coach, teaching him how to clearly express his ideas.

After this training, DeepSeek-R1 performs on par with, and in some respects better than, OpenAI's o1. On MATH-500, R1 scored 97.3%, slightly above o1-1217's 96.4%; on the more challenging AIME 2024, R1 reached 79.8% pass@1, edging out o1-1217's 79.2%. In coding, R1 earned a Codeforces rating of 2029, higher than 96.3% of human participants.

Yet DeepSeek-R1-Zero's potential may be even greater. With majority voting on AIME 2024, it achieved 86.7% accuracy, a score that surpasses OpenAI's o1-0912. The way its accuracy improves with more attempts suggests that R1-Zero may have acquired a basic reasoning framework rather than simply memorizing problem-solving patterns. The paper's data show stable performance from MATH-500 to AIME, and then to GSM8K, especially on complex problems requiring creative thinking. This broad performance suggests that R1-Zero may indeed have developed a fundamental reasoning ability, in contrast to models optimized for specific tasks.

So, inarticulate as it may be, DeepSeek-R1-Zero may be the real "genius" that has truly grasped reasoning.

Pure reinforcement learning may be an unexpected shortcut to AGI

The release of DeepSeek-R1 has drawn the field's attention to pure reinforcement learning because it arguably opens a new path for AI's evolution.

R1-Zero, a model trained entirely through reinforcement learning, has shown surprisingly general reasoning ability. It achieved remarkable results in math competitions, but more importantly, it is not just imitating thinking; it has developed a genuine form of reasoning.

This discovery may change how we understand machine learning. Traditional training methods may have been repeating a fundamental mistake by focusing too heavily on making AI imitate human thinking, and the industry may need to rethink the role of supervised learning in AI development. Through pure reinforcement learning, AI systems seem able to develop more native problem-solving abilities instead of being confined to pre-set solution frameworks.

Although R1-Zero's output is clearly hard to read, this "defect" may itself attest to how distinctive its way of thinking is, much like a genius child who invents a personal problem-solving method yet struggles to explain it in conventional language. It hints that true artificial general intelligence may require a cognitive mode quite different from that of humans.

This is reinforcement learning in its truest sense. As the developmental psychologist Jean Piaget argued, true understanding comes from active construction, not passive acceptance.