Author: Zuoye

A work of art is never completed, only abandoned.

Everyone is talking about AI Agents, but not everyone means the same thing. The AI Agent we in Crypto care about differs from the one the public sees, and both differ from what AI practitioners have in mind.

A long time ago I wrote "Crypto is the Illusion of AI". From then until now, the relationship between Crypto and AI has been a one-sided love affair. AI practitioners rarely mention terms like Web3 or blockchain, while Crypto practitioners are deeply infatuated with AI. Having watched AI Agent frameworks get tokenized, with astonishing results, I am still not sure we can truly bring AI practitioners into our world.

AI is the agent of Crypto, and that is the best way to read the current AI frenzy from the Crypto perspective. Crypto's enthusiasm for AI differs from that of other industries: we especially hope to combine AI with the issuance and operation of financial assets.

Agent Evolution, the Essence of Technology Marketing

AI Agent has at least three roots. OpenAI lists it as an important step toward AGI (Artificial General Intelligence), which has made the term popular beyond technical circles. In essence, however, Agent is not a new concept, and even with AI empowerment it is hard to call it a revolutionary technological trend.

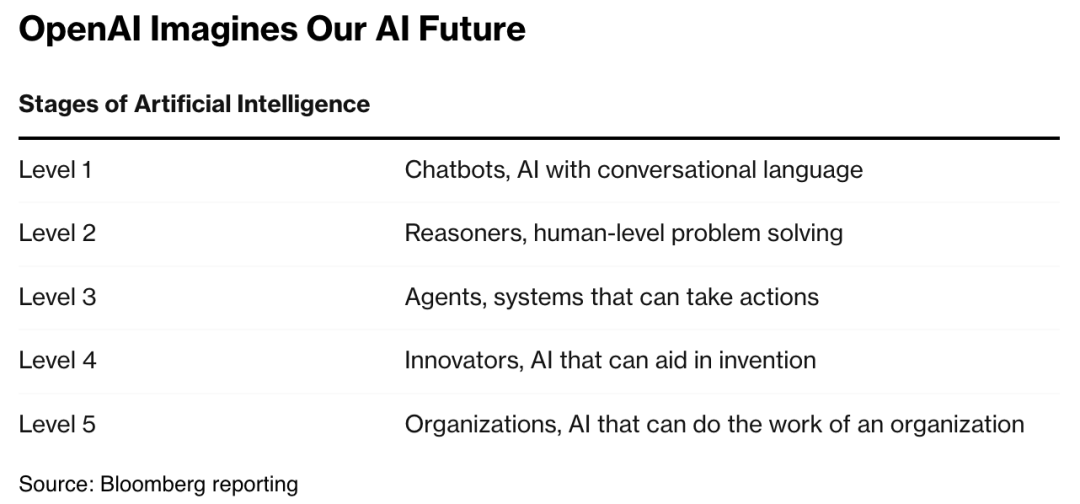

One is the AI Agent in OpenAI's eyes, similar to the L3 level of autonomous driving, AI Agent can be seen as having a certain level of advanced assisted driving capabilities, but still cannot completely replace humans.

Caption: OpenAI's planned AGI stages, image source: https://www.bloomberg.com/

Second, as the name implies, an AI Agent is an Agent empowered by AI. Agent mechanisms and models are nothing unusual in computer science. In OpenAI's roadmap, Agents form the L3 stage, after the dialogue form (ChatGPT) and the reasoning form (various Bots), and their defining feature is "autonomously performing a certain behavior". In the words of LangChain founder Harrison Chase: "An AI agent is a system that uses an LLM to decide the control flow of an application."
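To make Chase's definition concrete, here is a minimal Python sketch, assuming a hypothetical `fake_llm_decide` function that stands in for a real model call. The loop never hard-codes the order of steps: at each turn the "model" picks the next tool or decides to stop. The tool names and outputs are invented for illustration.

```python
from typing import Callable, Dict, List, Tuple

History = List[Tuple[str, str]]  # (tool name, tool output) pairs

def fake_llm_decide(task: str, history: History) -> str:
    """Hypothetical stand-in for an LLM call: returns the next tool name or "finish"."""
    used = {name for name, _ in history}
    if "weather" in task and "get_weather" not in used:
        return "get_weather"
    if "summarize" in task and "summarize" not in used:
        return "summarize"
    return "finish"

# Hypothetical tools; a real agent would wrap APIs, scripts, or other models here.
TOOLS: Dict[str, Callable[[History], str]] = {
    "get_weather": lambda history: "Sunny, 22 C",
    "summarize": lambda history: "Summary: " + "; ".join(out for _, out in history),
}

def run_agent(task: str,
              decide: Callable[[str, History], str] = fake_llm_decide,
              max_steps: int = 5) -> History:
    history: History = []
    for _ in range(max_steps):           # hard cap so a confused model cannot loop forever
        action = decide(task, history)   # the model, not the programmer, chooses the branch
        if action == "finish":
            break
        history.append((action, TOOLS[action](history)))
    return history

print(run_agent("check the weather and summarize it"))
```

Swapping `fake_llm_decide` for a real model changes nothing else in the loop. That separation is the point of the definition: the control flow lives in the model's decisions, not in the program text.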

The subtlety lies in who makes those decisions. Before LLMs appeared, an Agent mainly executed automated processes defined by humans. For example, when writing a crawler, programmers set the User-Agent header to mimic the browser version, operating system, and other details of real users. If an AI Agent were used to mimic human behavior in finer detail, we would get an AI Agent crawler framework that makes the crawler "more human-like".
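In the pre-LLM case, the "agent" is just a fixed string chosen by a human. A minimal Python sketch using the standard library's `urllib.request` follows. The browser string is illustrative, `build_request` is a hypothetical helper, and the request is only built, not sent.

```python
import urllib.request

# Illustrative desktop-browser User-Agent; without it, urllib sends "Python-urllib/3.x".
BROWSER_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)

def build_request(url: str, user_agent: str = BROWSER_UA) -> urllib.request.Request:
    """Build a request that presents itself to the server as a desktop browser."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})

req = build_request("https://example.com/")
# urllib stores header names via str.capitalize(), so the lookup key is "User-agent".
print(req.get_header("User-agent"))
# Fetching would be: urllib.request.urlopen(req).read()
```

The disguise here is static: every request carries the same fingerprint. An AI Agent crawler would instead decide dynamically how to behave, which is the difference the paragraph points at.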

In this evolution, AI Agents have to be combined with existing scenarios, and there is almost no completely original field. Even the code completion and generation capabilities of Cursor and GitHub Copilot are functional enhancements built on the ideas behind LSP (Language Server Protocol). There are many such examples:

Apple: AppleScript (Script Editor) → Alfred → Siri → Shortcuts → Apple Intelligence

Terminal: Terminal (macOS) / PowerShell (Windows) → iTerm2 → Warp (AI native)

Human-Computer Interaction: Web 1.0 (CLI, TCP/IP, Netscape) → Web 2.0 (GUI, REST API, search engines, Google, super apps) → Web 3.0 (AI Agent + dApp?)

To explain briefly: in the history of human-computer interaction, the combination of the Web 1.0 GUI and the browser first let the masses use computers without barriers, with Windows + IE as the representative pairing, while APIs became the data abstraction and transmission standard behind the Internet. In the Web 2.0 era, browsers moved on to Chrome, the shift to mobile changed how people use the Internet, and the apps of super platforms such as WeChat and Meta now cover every aspect of people's lives.

Third, the concept of Intent in the Crypto field was the precursor to the AI Agent boom, though this holds only within Crypto. From the functionally limited Bitcoin Script to Ethereum smart contracts, the Agent concept was already widely used. The later moves from cross-chain bridges to chain abstraction, and from EOAs to AA (account abstraction) wallets, are natural extensions of the same thinking. So it is not surprising that once AI Agents "invaded" Crypto, they were aimed straight at DeFi scenarios.

Herein lies the confusion around the AI Agent concept. In the Crypto context, what we actually want is an Agent for "automated finance" and "automated meme subscription", yet under OpenAI's definition such a risky scenario would require L4/L5 before it truly works. Meanwhile, the public is playing with code generation or one-click AI summarization and writing. The two sides are not talking on the same dimension.

With what we really want in view, the next step is to discuss the organizational logic of AI Agents and leave the technical details in the background. After all, the point of the agency concept is to remove the obstacles to a technology's large-scale adoption, just as the browser was the Midas touch for the PC industry. So we will focus on two points: AI Agents from the perspective of human-computer interaction, and the differences and connections between AI Agents and LLMs. Together these lead into the third part: what the combination of Crypto and AI Agents will leave behind.

let AI_Agent = LLM+API;

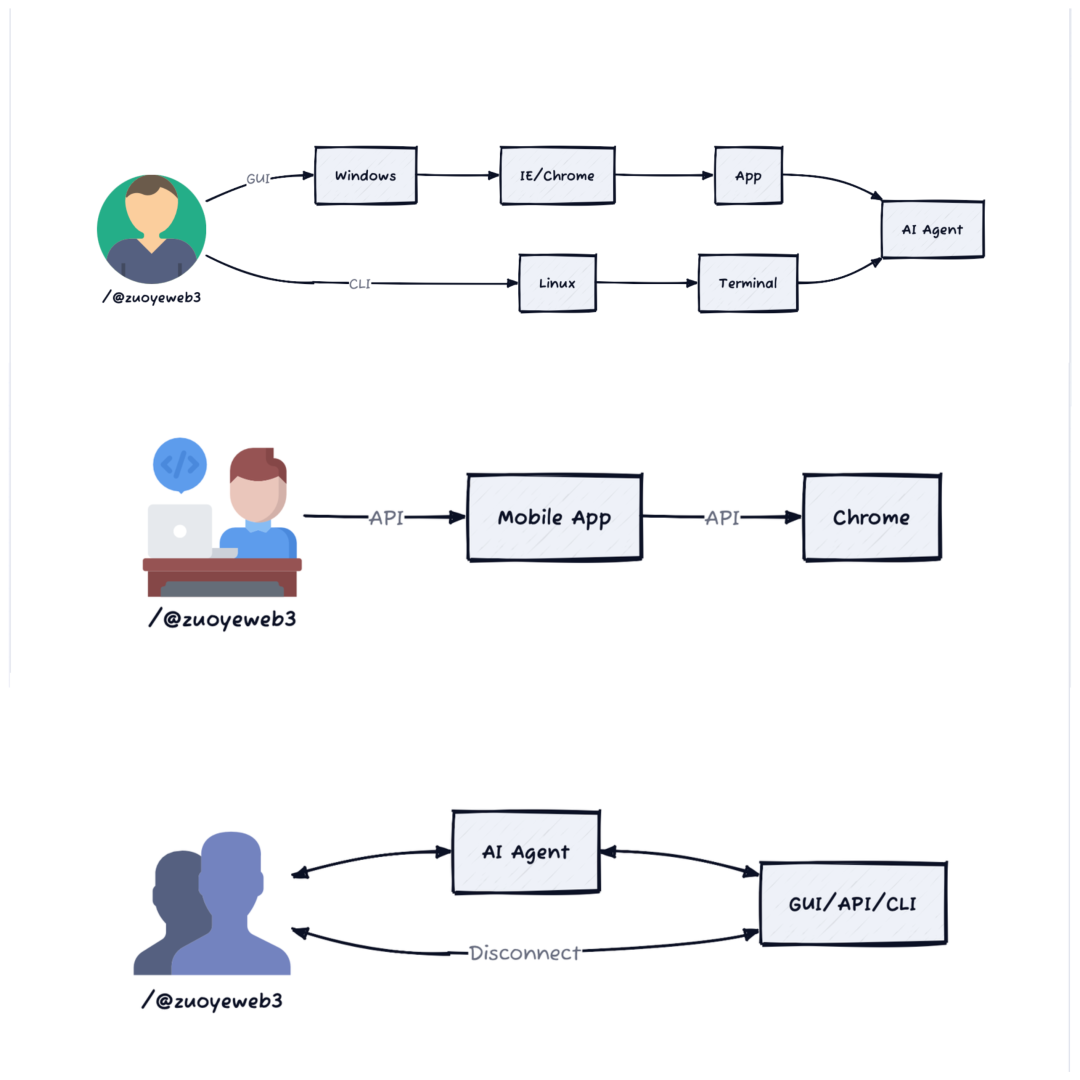

Before chat-style interaction like ChatGPT, humans interacted with computers mainly through the GUI (Graphical User Interface) and the CLI (Command-Line Interface). GUI thinking kept evolving into specific forms such as browsers and apps, while the combination of CLI and shell has changed little.

But this is only the "front-end" surface of human-computer interaction. As the Internet developed, the growing volume and variety of data led to more and more "back-end" interactions, both between data sources and between apps. The two layers depend on each other: even simple web browsing requires them to work together.

If the interaction between users and browsers or apps is the user entry point, then the connections and hand-offs between APIs are what keep the Internet actually running, and this too is part of the Agent. Ordinary users do not need to understand terms like command line and API to achieve their goals.

LLMs go a step further: users no longer even need to search. The whole process can be described in the following steps:

The user opens the chat window;

The user uses natural language, either text or voice, to describe their needs;

LLM parses it into a procedural set of operations;

LLM returns the result to the user.
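The four steps above can be sketched in Python. This assumes a hypothetical `parse_request` function standing in for the LLM in step 3. A real model would parse free-form language, while this regex only understands a handful of arithmetic phrasings, just enough to show natural language becoming a procedural set of operations.

```python
import operator
import re
from typing import List, Tuple

# Hypothetical operation vocabulary for this sketch.
OPS = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply by": operator.mul,
    "divide by": operator.truediv,
}
PATTERN = re.compile(r"(add|subtract|multiply by|divide by)\s+(-?\d+(?:\.\d+)?)")

def parse_request(text: str) -> List[Tuple[str, float]]:
    """Step 3 stand-in: turn the user's sentence into an ordered list of operations."""
    return [(verb, float(num)) for verb, num in PATTERN.findall(text.lower())]

def execute(ops: List[Tuple[str, float]], start: float = 0.0) -> float:
    """Run the operations in order, starting from `start`."""
    value = start
    for verb, num in ops:
        value = OPS[verb](value, num)
    return value

def chat(user_message: str) -> str:
    """Steps 2 to 4: natural-language need in, operations parsed and run, result out."""
    ops = parse_request(user_message)
    return f"Result: {execute(ops):g}"

print(chat("Start from zero, add 5, then multiply by 3"))
```

The user never sees `OPS` or the regex, in the same way a search user never sees the ranking pipeline.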

In this process, Google faces the biggest challenge: users no longer need to open a search engine, only one of the many GPT-like chat windows. The traffic entry point is quietly shifting, which is exactly why some people think this round of LLMs is revolutionizing search.

So what role does AI Agent play in this?

In a nutshell, AI Agent is a specialization of LLM.

Today's LLMs are not AGI, that is, not the L5 "Organizations" stage in OpenAI's vision, and their capabilities are heavily limited. For example, they hallucinate easily when fed too much user input. One important reason is the training mechanism: if you repeatedly tell GPT that 1+1=3, there is some probability that when you next ask 1+1+1=?, it will answer 4.

At that point, GPT's feedback comes entirely from a single user. If the model is not connected to the Internet, your input may well change how it operates, and it becomes a dumb GPT that only knows 1+1=3. If the model is allowed online, its feedback becomes more diverse. After all, on the Internet, people who think 1+1=2 are the overwhelming majority.

To make it harder still: if we must run the LLM locally, how do we avoid such problems?

A simple, brute-force approach is to run two LLMs at once and require them to verify each other's answer to every question, which reduces the probability of errors. If that is not enough, there are other methods, such as assigning two roles to each process, one asking the question and the other fine-tuning its wording, so that the language becomes more standardized and rational.
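A minimal Python sketch of this mutual verification, assuming models are plain functions from prompt to answer. `honest` and `misled` are hypothetical stubs, not real LLMs. An answer is released only when both models agree, and an optional `refine` step plays the second role that tidies up the question first.

```python
from typing import Callable, Optional

Model = Callable[[str], str]  # any function mapping a prompt to an answer

def cross_checked_answer(question: str,
                         model_a: Model,
                         model_b: Model,
                         refine: Optional[Model] = None,
                         retries: int = 2) -> Optional[str]:
    """Return an answer only when two independent models agree; otherwise None."""
    if refine is not None:
        question = refine(question)       # second role: standardize the question first
    for _ in range(retries + 1):          # retries only help if the models sample randomly
        a = model_a(question).strip()
        b = model_b(question).strip()
        if a == b:
            return a
    return None                           # persistent disagreement: escalate instead of guessing

# Hypothetical stub models for illustration.
def honest(q: str) -> str:
    return "2"

def misled(q: str) -> str:
    return "3"  # e.g. a model that was told 1+1=3 in context

print(cross_checked_answer("1+1=?", honest, honest))
print(cross_checked_answer("1+1=?", honest, misled))
```

Returning `None` instead of picking one answer is a deliberate choice. Two models that disagree give no basis for trusting either one.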

Of course, problems can still occur online: an LLM might pull its answer from a silly forum, which could be even worse. Avoiding such data, however, shrinks the usable data volume. One option is to split and reorganize the existing data, or even generate new data from old data, to make responses more reliable. This is essentially the idea behind Retrieval-Augmented Generation (RAG).
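A toy Python sketch of the retrieval side of RAG: split documents into chunks, rank the chunks against the question, and prepend the best ones to the prompt before it reaches the model. Word-overlap scoring is a deliberate simplification, since production systems typically rank with embeddings. The helper names and the two-line corpus are hypothetical, and the "generate new data" idea is not shown.

```python
import re
from typing import List, Set

def chunk(text: str, size: int = 8) -> List[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def tokens(text: str) -> Set[str]:
    """Lowercased word set used for overlap scoring."""
    return set(re.findall(r"\w+", text.lower()))

def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Toy retriever: rank chunks by how many words they share with the query."""
    q = tokens(query)
    return sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)[:k]

def build_prompt(query: str, corpus: List[str], k: int = 1) -> str:
    """Augment the question with retrieved context before sending it to the LLM."""
    chunks = [c for doc in corpus for c in chunk(doc)]
    context = "\n".join(retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

corpus = [
    "Basic arithmetic: 1+1=2 is accepted by mathematicians everywhere.",
    "A forum post claims 1+1=3 and collects many likes.",
]
print(build_prompt("What does basic arithmetic say 1+1 equals?", corpus))
```

The model then answers from the retrieved chunk instead of from whatever it absorbed in training or earlier in the conversation.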

Humans and machines need to understand each other. Once we let multiple LLMs understand and collaborate with each other, we are essentially touching on how AI Agents operate: an agent, acting on a human's behalf, calls on other resources, including large models and other Agents.

This gives us the connection between LLMs and AI Agents. An LLM is a compilation of knowledge that humans interact with through a dialogue window, but in practice certain task flows can be packaged as specific mini-programs, Bots, or instruction sets, and these we define as Agents.

AI Agents are still part of the LLM world, yet the two cannot be equated. AI Agents are invoked on top of LLMs, with particular emphasis on coordinating external programs, LLMs, and other Agents, hence the formula "AI Agent = LLM + API".

So we can add AI Agents to the LLM workflow. Take calling X's API as an example:

The human user opens the chat window;

The user uses natural language, either text or voice, to describe their needs;

The LLM recognizes it as an API-call AI Agent task and hands control of the dialogue to that Agent;

The AI Agent asks the user for their X account and API credentials, then communicates with X based on the user's description;

The AI Agent returns the final result to the user.
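The five steps above, sketched in Python. Everything here is hypothetical: `FakeXClient` is an in-memory stand-in that calls no real X endpoint, and `route` uses a keyword check where a real LLM would classify intent. A real integration would go through X's official API and its authentication flow.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

@dataclass
class FakeXClient:
    """Hypothetical stand-in for an X API client; no real endpoint is called."""
    api_key: str
    posts: Dict[str, List[str]] = field(
        default_factory=lambda: {"alice": ["gm", "shipping v2 today"]})

    def latest_posts(self, handle: str, n: int) -> List[str]:
        if not self.api_key:
            raise PermissionError("missing API credentials")
        return self.posts.get(handle, [])[-n:]

def route(message: str) -> str:
    """Step 3 stand-in: decide which agent owns this turn."""
    return "x_agent" if "posts" in message.lower() else "chat"

def x_agent(handle: str, api_key: str) -> str:
    """Steps 4 and 5: query X with the user's credentials and format the result."""
    posts = FakeXClient(api_key=api_key).latest_posts(handle, n=2)
    return f"@{handle}'s latest posts: " + " | ".join(posts)

def handle_turn(message: str, ask_user: Callable[[str], Tuple[str, str]]) -> str:
    if route(message) == "x_agent":
        handle, api_key = ask_user("Which X account, and what API key?")  # step 4
        return x_agent(handle, api_key)                                    # step 5
    return "(ordinary LLM reply)"

print(handle_turn("Show me alice's latest posts", lambda prompt: ("alice", "demo-key")))
```

The user only ever types sentences and answers one question. The client object, the key check, and the formatting stay hidden behind the agent.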

Remember the evolution of human-computer interaction? The browsers, APIs, and other tools of Web 1.0 and Web 2.0 will still exist, but users can ignore them entirely and interact only with the AI Agent. API calls happen through dialogue, and the APIs can be of any type, including local data, online information, and data from external apps, as long as the provider exposes the interface and the user has permission to use it.

The figure above shows a complete AI Agent workflow. The LLM can be seen either as a part separate from the AI Agent or as two sub-steps of one process, but either way, everything serves the user's needs.

From the perspective of human-computer interaction, it is almost like the user talking to themselves: you simply express your thoughts freely, and the AI/LLM/AI Agent guesses your needs again and again. Adding feedback mechanisms, and requiring the LLM to remember the current context, ensures that the AI Agent does not suddenly forget what it is doing.
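One simple way to keep an agent from forgetting its task is sketched below in Python. It covers only the context-memory half of the paragraph, not feedback. `ConversationMemory` is a hypothetical class: it pins the user's goal and keeps a sliding window of recent turns, so trimming old messages never drops the task itself.

```python
from collections import deque
from typing import Deque, List, Tuple

Message = Tuple[str, str]  # (role, text)

class ConversationMemory:
    """Pins the user's goal and keeps a sliding window of recent turns."""

    def __init__(self, goal: str, max_turns: int = 6):
        self.goal = goal
        self.turns: Deque[Message] = deque(maxlen=max_turns)  # oldest turns fall off automatically

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def context(self) -> List[Message]:
        """What gets sent to the model each turn: the goal first, then the recent dialogue."""
        return [("system", f"Current task: {self.goal}")] + list(self.turns)

memory = ConversationMemory("fetch my latest posts from X", max_turns=2)
memory.add("user", "fetch my latest posts")
memory.add("agent", "Which account?")
memory.add("user", "@alice")  # the first user turn is dropped, but the task survives
for role, text in memory.context():
    print(f"{role}: {text}")
```

A fixed window is the crudest policy. Summarizing old turns instead of dropping them is a common refinement, but the pinned goal is what keeps the agent on task.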

In short, the AI Agent is a more personalized product, and this is what essentially distinguishes it from traditional scripts and automation tools: it acts like a personal butler attending to the user's real needs. It must be stressed, however, that this personalization is still the result of probabilistic inference. An L3 AI Agent lacks human-level understanding and expression, so connecting it to external APIs is full of dangers.

Monetization of AI Frameworks

The fact that AI frameworks can be monetized is an important reason I remain interested in Crypto. In the traditional AI technology stack, frameworks are not very important, at least not as important as data and computing power, and it is hard to monetize AI products through the framework. Most AI algorithms and model frameworks are open source; what is truly closed source is sensitive material like data.

Essentially, an AI framework or model is a container for, and a combination of, a series of algorithms, like an iron pot for stewing a big goose. The breed of the goose and the control of the fire are what make the taste differ, and the product being sold should be the goose. But now Web3 customers have arrived, and they want to buy the pot, not the goose.

The reason is simple. Web3's basic AI products all pick up others' leftovers: they build customized products on existing AI frameworks, algorithms, and products, and the technical principles behind different Crypto AI frameworks barely differ. Since the technology cannot set them apart, they compete on names, application scenarios, and so on. As a result, even the slightest tweak to an AI framework becomes the backing for a different token, which produced the framework bubble of Crypto AI Agents.

Because there is no need to invest heavily in training data and algorithms, differentiating by name becomes especially important. Even if DeepSeek V3 is cheaper, building a model still consumes PhDs' hair, GPUs, electricity, and other resources.

In a sense, this is also Web3's consistent style of late: the token issuance platform is worth more than the tokens themselves. Pump.Fun and Hyperliquid both fit this pattern. The Agent should have been the application and the asset, yet the Agent issuance framework has become the hottest product.

In fact, this is also a way of anchoring value. Since individual Agents are indistinguishable, the Agent framework is more stable and can capture the value generated by asset issuance. This is the current 1.0 version of the combination of Crypto and AI Agents.

A 2.0 version is now emerging, typified by the combination of DeFi and AI Agents. The DeFAI concept is of course a market behavior stimulated by hype, but the following developments suggest something different:

Morpho is challenging old lending products like Aave;

Hyperliquid is replacing dYdX in on-chain derivatives, and is even challenging Binance's CEX listing effect;

Stablecoins are becoming payment tools for off-chain scenarios.

It is against this backdrop of DeFi's evolution that AI is improving DeFi's basic logic. If DeFi's earlier logic was to prove that smart contracts were feasible, AI Agents are changing how DeFi is built: you no longer need to understand DeFi to create DeFi products. This is a further layer of abstraction on top of what the underlying chains provide.

The era of everyone being a programmer is coming. Complex computation can be outsourced to the LLMs and APIs behind AI Agents, individuals only need to focus on their ideas, and natural language is efficiently converted into programming logic.

Conclusion

This article does not mention any specific Crypto AI Agent tokens or frameworks, because Cookie.Fun, an AI Agent information aggregation and token discovery platform, already does that well enough. In terms of value, such platforms come first, AI Agent frameworks second, and the fleeting Agent tokens last, so listing more of that information here would add nothing.

Yet having observed this period, I find the market still lacks a real discussion of where Crypto AI Agents ultimately point. We cannot keep discussing pointers; what matters is the change in memory.

And it is the endless ability to securitize various underlying assets that is the charm of Crypto.